tf-explain (read the docs and the API reference)

A third-party, Keras-based package for TF 2+ that explains how your network (CNN) works through visualization.

>>>> Try out the Colab notebook (for a local Jupyter notebook or a plain Python file you will need to modify it appropriately). It shows how to create the various visualization images shown below, for the case where the model already exists.

tf-explain implements methods you can use at different levels:


CASE1: on a loaded model with the core API (which saves outputs to disk)

# general example

# Import explainer
from tf_explain.core.grad_cam import GradCAM

# Instantiation of the explainer
explainer = GradCAM()

# Call to explain() method
output = explainer.explain(*explainer_args)

# Save output
explainer.save(output, output_dir, output_name)


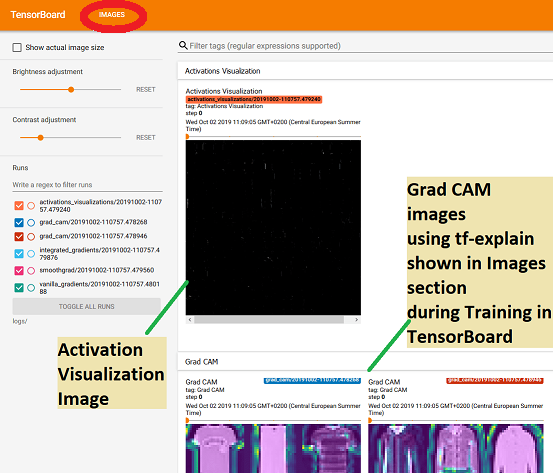

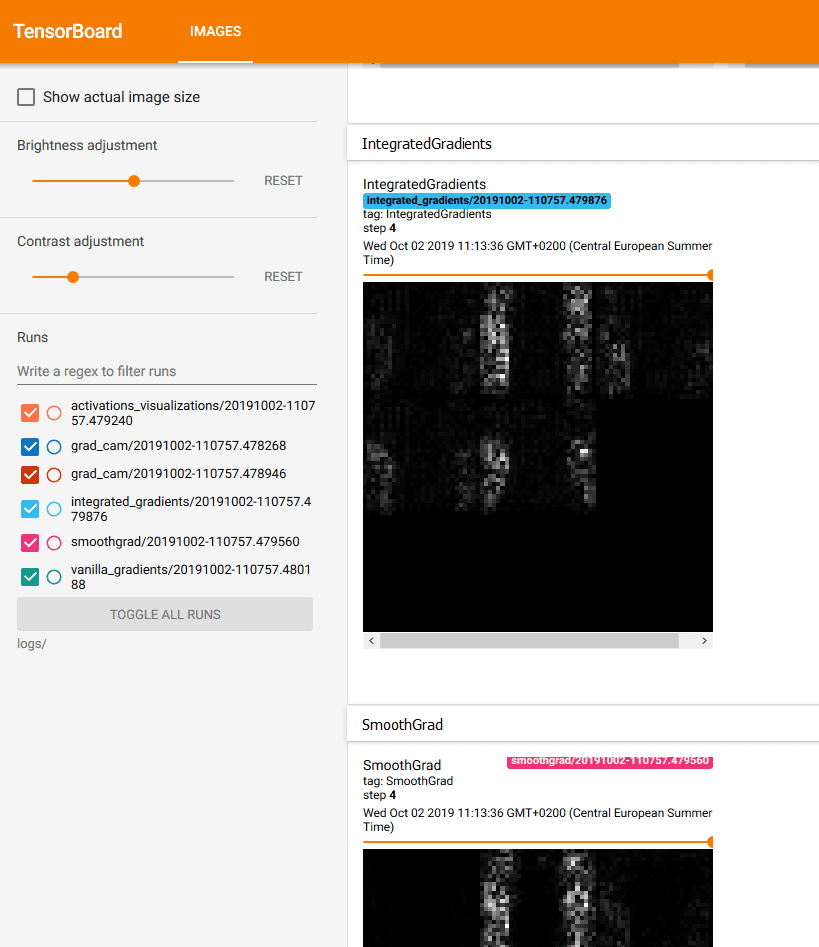

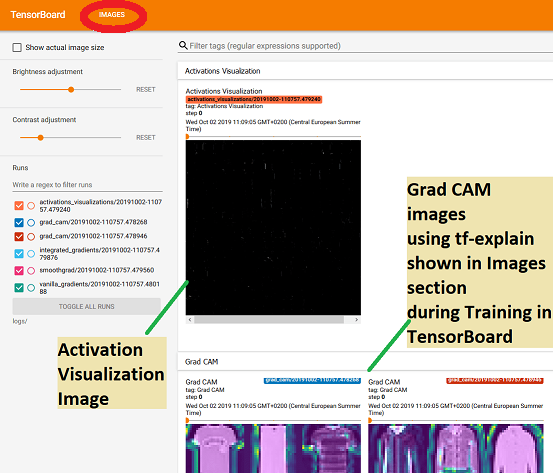

CASE2: at training time with callbacks (which integrate with TensorBoard)

After training with the callbacks, launch TensorBoard (pointing it at the output_dir you passed to the callbacks) and view the outputs in the Images section.

# general example

from tf_explain.callbacks.grad_cam import GradCAMCallback

model = [...]  # creation of the model not shown

callbacks = [
    GradCAMCallback(
        validation_data=(x_val, y_val),
        layer_name="activation_1",
        class_index=0,
        output_dir=output_dir,
    )
]

model.fit(x_train, y_train, batch_size=2, epochs=2, callbacks=callbacks)
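A rough sketch of how a Keras callback can push images into TensorBoard's Images section, using `tf.summary.create_file_writer` and `tf.summary.image`. `ImageToTensorBoardCallback` is a hypothetical name: it only logs the images it is given, whereas a tf-explain callback would first compute an explanation grid. This illustrates the general mechanism under that assumption; it is not tf-explain's actual code.

```python
import numpy as np
import tensorflow as tf


class ImageToTensorBoardCallback(tf.keras.callbacks.Callback):
    """Hypothetical minimal callback: writes a fixed batch of images to
    TensorBoard at the end of every epoch (no explanation is computed)."""

    def __init__(self, images, log_dir):
        super().__init__()
        self.images = images  # float array (N, H, W, C), values in [0, 1], C in {1, 3, 4}
        self.writer = tf.summary.create_file_writer(log_dir)

    def on_epoch_end(self, epoch, logs=None):
        with self.writer.as_default():
            # Appears under the "Images" tab, one step per epoch
            tf.summary.image("explanations", self.images, step=epoch,
                             max_outputs=len(self.images))
        self.writer.flush()
```

You would pass it in `callbacks=[...]` to `model.fit` exactly like the tf-explain callbacks, then run `tensorboard --logdir` on the same directory.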

Example TensorBoard display of an activation visualization image and a Grad-CAM image (see below for details about what these are)

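>>> FYI, tf-explain uses tf.GradientTape, which records the operations performed as an image is processed through your CNN so that gradients (for example of a class score with respect to the input or to a layer's outputs) can be computed afterwards.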
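A minimal sketch of what recording with `tf.GradientTape` looks like: the gradient of one class score with respect to the input pixels, which is the idea behind a vanilla-gradients saliency map. `input_gradients` is a hypothetical helper, not part of tf-explain's API, and `model` is assumed to be any Keras classifier that outputs class scores.

```python
import numpy as np
import tensorflow as tf


def input_gradients(model, images, class_index):
    """Gradient of the chosen class score w.r.t. the input pixels.
    Hypothetical helper; a sketch, not tf-explain's implementation."""
    images = tf.convert_to_tensor(images, dtype=tf.float32)
    with tf.GradientTape() as tape:
        tape.watch(images)  # inputs are not Variables, so watch them explicitly
        predictions = model(images)
        class_score = predictions[:, class_index]
    # One gradient value per input pixel/channel, same shape as `images`
    return tape.gradient(class_score, images)
```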

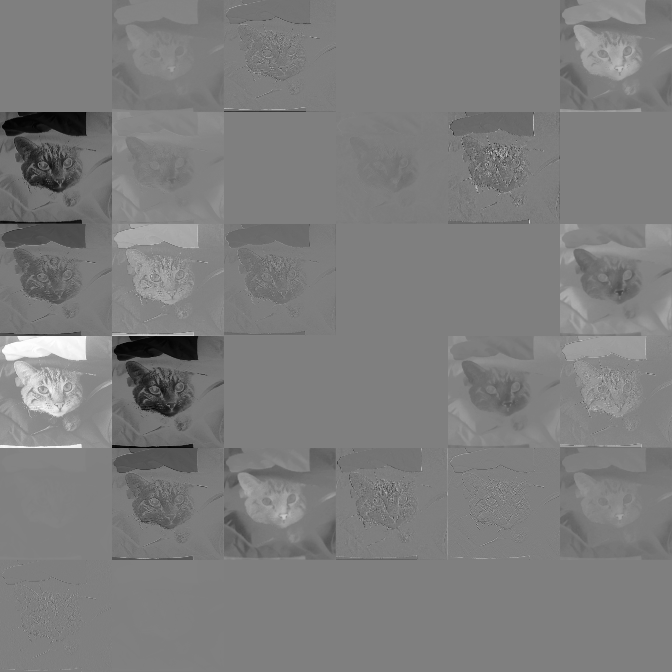

Activation Visualization

For a given layer of a CNN, visualizes how an input is processed by that layer by showing each of the layer's output feature maps.
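The feature maps of one layer can be obtained with a sub-model such as `tf.keras.Model(model.inputs, model.get_layer(name).output)`. Below is a NumPy-only sketch of the display step: tiling the C feature maps of a single image into one grid image. The helper name `activations_to_grid`, the per-map min-max normalization, and the near-square grid layout are assumptions for illustration, not necessarily what tf-explain does.

```python
import math

import numpy as np


def activations_to_grid(activations):
    """Tile the feature maps of one image into a single 2-D grid.

    activations: array of shape (H, W, C) for one image.
    Returns a (rows*H, cols*W) float32 array with values in [0, 1].
    """
    h, w, c = activations.shape
    cols = math.ceil(math.sqrt(c))  # near-square layout
    rows = math.ceil(c / cols)
    grid = np.zeros((rows * h, cols * w), dtype=np.float32)
    for k in range(c):
        fmap = activations[:, :, k].astype(np.float32)
        span = fmap.max() - fmap.min()
        # Min-max normalize each map so weak and strong channels are both visible
        fmap = (fmap - fmap.min()) / span if span > 0 else np.zeros_like(fmap)
        r, col = divmod(k, cols)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = fmap
    return grid
```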

Here is what an activation map (visualization) looks like for the MobileNet CNN classifier model that ships with TF 2+ (tf.keras.applications).


Creation if you already have an existing model

import tensorflow as tf
from tf_explain.core.activations import ExtractActivations

# IMAGE_PATHS (a list of .jpg paths) is assumed to be defined earlier
# LOAD your model once, outside the loop --here we are just loading MobileNet that is included with TF2+
model = tf.keras.applications.mobilenet.MobileNet(weights='imagenet', include_top=True)
explainer = ExtractActivations()

for each_path in IMAGE_PATHS:
    img = tf.keras.preprocessing.image.load_img(each_path, target_size=(224, 224))
    img = tf.keras.preprocessing.image.img_to_array(img)
    data = ([img], None)
    # Define name with which to save the result as
    name = each_path.split("/")[-1].split(".jpg")[0]
    # Visualize the feature maps of one layer (example choice; pick any layer name from the MobileNet summary below)
    grid = explainer.explain(data, model, layers_name=["conv_pw_13_relu"])
    explainer.save(grid, '.', name + 'activations_MobileNet.png')

Callback during training

from tf_explain.callbacks.activations_visualization import ActivationsVisualizationCallback

model = [...]  # where you define your model --code not shown

callbacks = [
    ActivationsVisualizationCallback(
        validation_data=(x_val, y_val),
        layers_name=["activation_1"],
        output_dir=output_dir,
    )
]

model.fit(x_train, y_train, batch_size=2, epochs=2, callbacks=callbacks)

CAM: class activation mapping and its variants (e.g. Grad-CAM)

Class activation mapping highlights the areas of the image that contribute most to the network's decision (the activation of the chosen class).
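A NumPy sketch of the Grad-CAM combination step, assuming you already have, for one image, the chosen conv layer's feature maps A^k and the gradients of the class score with respect to them (for example from a `tf.GradientTape` over a model that outputs both). Following the standard Grad-CAM formulation, each channel weight is the spatial mean of its gradients and the heatmap is ReLU(sum_k weight_k * A^k). `grad_cam_heatmap` is hypothetical; tf-explain's implementation may add further steps (gradient filtering, resizing to the input size, color mapping).

```python
import numpy as np


def grad_cam_heatmap(feature_maps, gradients):
    """Grad-CAM combination step for one image (hypothetical helper).

    feature_maps: (H, W, K) activations A^k of the chosen conv layer
    gradients:    (H, W, K) gradients d(class score)/dA^k for the same layer
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    # Channel weights: global-average-pool the gradients over H and W
    weights = gradients.mean(axis=(0, 1))  # shape (K,)
    # Weighted sum of the feature maps; ReLU keeps only positive evidence
    cam = np.maximum(np.tensordot(feature_maps, weights, axes=([2], [0])), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The resulting low-resolution map (e.g. 14x14 for VGG16's last conv block) is then upsampled and overlaid on the original image.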

Here is the original image and the Grad-CAM visualization image (ignore the size difference; the input is resized for the CNN anyway). This is for the VGG16 CNN classifier model that ships with TF 2+.


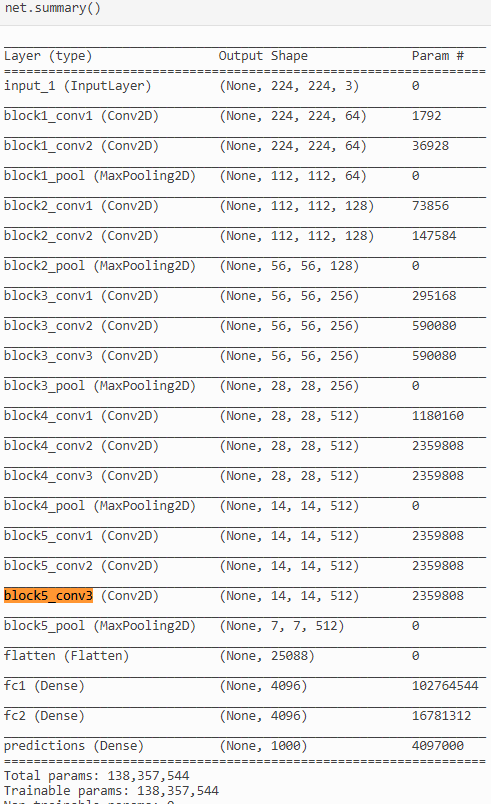

Creation if you already have an existing model. Note: you must know the name of the target layer, here 'block5_conv3' for the VGG16 model that ships with TF 2+. See the summary of this network: it is the last convolutional layer of the network.

import tensorflow as tf
from tf_explain.core.grad_cam import GradCAM

# IMAGE_PATHS and indices (the target class index for each image) are assumed to be defined earlier
# LOAD your model once, outside the loop --here we are just loading VGG16 that is included with TF2+
model = tf.keras.applications.vgg16.VGG16(weights='imagenet', include_top=True)
# setup for Grad-CAM
explainer = GradCAM()

for each_path, index in zip(IMAGE_PATHS, indices):
    img = tf.keras.preprocessing.image.load_img(each_path, target_size=(224, 224))
    img = tf.keras.preprocessing.image.img_to_array(img)
    data = ([img], None)
    # Define name with which to save the result as
    name = each_path.split("/")[-1].split(".jpg")[0]
    # Keyword arguments avoid relying on the positional order of class_index / layer_name
    grid = explainer.explain(data, model, class_index=index, layer_name='block5_conv3')
    explainer.save(grid, '.', name + 'grad_cam_VGG16.png')
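Rather than reading the layer name off the summary, you can look it up programmatically. A minimal sketch with a hypothetical helper `last_conv_layer_name` (not part of tf-explain): it walks `model.layers` backwards and returns the first `Conv2D` whose output still has a spatial size above 1, which skips 1x1 classifier heads such as MobileNet's `conv_preds`. For VGG16 it should return 'block5_conv3'; whether that is the best layer for Grad-CAM is still a judgment call, so check the summary.

```python
import tensorflow as tf


def last_conv_layer_name(model):
    """Name of the last Conv2D layer whose output is still spatial (H > 1).
    Hypothetical helper, assuming a built functional/Sequential Keras model."""
    for layer in reversed(model.layers):
        if not isinstance(layer, tf.keras.layers.Conv2D):
            continue
        shape = layer.output.shape  # (batch, H, W, C) for a 2-D conv
        if shape[1] is not None and shape[1] > 1:
            return layer.name
    raise ValueError("No spatial Conv2D layer found in the model")
```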

Callback during training

from tf_explain.callbacks.grad_cam import GradCAMCallback

model = [...]  # where you define your model --code not shown

callbacks = [
    GradCAMCallback(
        validation_data=(x_val, y_val),
        layer_name="activation_1",
        class_index=0,
        output_dir=output_dir,
    )
]

model.fit(x_train, y_train, batch_size=2, epochs=2, callbacks=callbacks)

Occlusion Sensitivity Visualization Images

Visualizes how parts of the image affect the neural network's confidence by iteratively occluding (covering) the image one patch at a time.

Example images: patch size 10 | patch size 20
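A minimal NumPy sketch of the occlusion idea, assuming a `predict_fn` that maps a batch of images (N, H, W, C) to class probabilities (N, num_classes); a Keras model's `model.predict` has that shape. The helper name and the constant fill value are assumptions. It runs one forward pass per patch for clarity; tf-explain's own implementation may differ (batching, fill value, how the map is colored).

```python
import numpy as np


def occlusion_sensitivity(predict_fn, image, class_index, patch_size, fill_value=0.0):
    """How much the class score drops when each patch is hidden.
    Hypothetical helper; returns an (H, W) float32 map."""
    h, w = image.shape[:2]
    baseline = predict_fn(image[np.newaxis])[0, class_index]
    sensitivity = np.zeros((h, w), dtype=np.float32)
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            occluded = image.copy()
            # Cover one square patch with a constant value
            occluded[top:top + patch_size, left:left + patch_size] = fill_value
            score = predict_fn(occluded[np.newaxis])[0, class_index]
            # Large positive values mark regions the prediction depends on
            sensitivity[top:top + patch_size, left:left + patch_size] = baseline - score
    return sensitivity
```

A smaller patch size gives a finer map but needs more forward passes: (H / patch_size) x (W / patch_size) of them.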

Creation if you already have an existing model

import tensorflow as tf
from tf_explain.core.occlusion_sensitivity import OcclusionSensitivity

# IMAGE_PATHS and indices (the target class index for each image) are assumed to be defined earlier
# LOAD your model once, outside the loop --here we are just loading VGG16 that is included with TF2+
model = tf.keras.applications.vgg16.VGG16(weights='imagenet', include_top=True)
explainer = OcclusionSensitivity()

for each_path, index in zip(IMAGE_PATHS, indices):
    img = tf.keras.preprocessing.image.load_img(each_path, target_size=(224, 224))
    img = tf.keras.preprocessing.image.img_to_array(img)
    data = ([img], None)
    # Define name with which to save the result as
    name = each_path.split("/")[-1].split(".jpg")[0]
    # Compute Occlusion Sensitivity for patch_size 20
    grid = explainer.explain(data, model, class_index=index, patch_size=20)
    explainer.save(grid, '.', name + 'occlusion_sensitivity_20_VGG16.png')

Callback during training

from tf_explain.callbacks.occlusion_sensitivity import OcclusionSensitivityCallback

model = [...]  # where you define your model --code not shown

callbacks = [
    OcclusionSensitivityCallback(
        validation_data=(x_val, y_val),
        class_index=0,
        patch_size=10,
        output_dir=output_dir,
    )
]

model.fit(x_train, y_train, batch_size=2, epochs=2, callbacks=callbacks)

Example Callback code with multiple callbacks to produce different visualizations

import numpy as np
import tensorflow as tf
import tf_explain

INPUT_SHAPE = (28, 28, 1)
NUM_CLASSES = 10

AVAILABLE_DATASETS = {
    'mnist': tf.keras.datasets.mnist,
    'fashion_mnist': tf.keras.datasets.fashion_mnist,
}
DATASET_NAME = 'fashion_mnist'  # Choose between "mnist" and "fashion_mnist"

# Load dataset
dataset = AVAILABLE_DATASETS[DATASET_NAME]
(train_images, train_labels), (test_images, test_labels) = dataset.load_data()

# Convert from (28, 28) images to (28, 28, 1)

train_images = train_images[..., tf.newaxis].astype('float32')

test_images = test_images[..., tf.newaxis].astype('float32')

# One-hot encode labels 0, 1, .., 9 to [0, 0, .., 1, 0, 0]
train_labels = tf.keras.utils.to_categorical(train_labels, num_classes=NUM_CLASSES)
test_labels = tf.keras.utils.to_categorical(test_labels, num_classes=NUM_CLASSES)

# Create model
img_input = tf.keras.Input(INPUT_SHAPE)
x = tf.keras.layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu')(img_input)
x = tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', name='target_layer')(x)
x = tf.keras.layers.MaxPool2D(pool_size=(2, 2))(x)
x = tf.keras.layers.Dropout(0.25)(x)
x = tf.keras.layers.Flatten()(x)
x = tf.keras.layers.Dense(128, activation='relu')(x)
x = tf.keras.layers.Dropout(0.5)(x)
x = tf.keras.layers.Dense(NUM_CLASSES, activation='softmax')(x)

model = tf.keras.Model(img_input, x)
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Select a subset of the validation data to examine

validation_class_zero = (np.array([

el for el, label in zip(test_images, test_labels)

if np.all(np.argmax(label) == 0)

][0:5]), None)

validation_class_one = (np.array([

el for el, label in zip(test_images, test_labels)

if np.all(np.argmax(label) == 1)

][0:5]), None)

# Instantiate callbacks

# class_index value should match the validation_data selected above

callbacks = [

tf_explain.callbacks.GradCAMCallback(validation_class_zero, layer_name='target_layer', class_index=0),

tf_explain.callbacks.GradCAMCallback(validation_class_one, layer_name='target_layer', class_index=1),

tf_explain.callbacks.ActivationsVisualizationCallback(validation_class_zero, layers_name=['target_layer']),

tf_explain.callbacks.SmoothGradCallback(validation_class_zero, class_index=0, num_samples=15, noise=1.),

tf_explain.callbacks.IntegratedGradientsCallback(validation_class_zero, class_index=0, n_steps=10),

tf_explain.callbacks.VanillaGradientsCallback(validation_class_zero, class_index=0),

]

# Start training

model.fit(train_images, train_labels, epochs=5, callbacks=callbacks)
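The list comprehensions above loop over every test image in Python. A vectorized alternative, shown as a hypothetical helper `first_examples_of_class`, builds a NumPy boolean mask from the one-hot labels and returns the same `(images, None)` tuple that the callbacks receive:

```python
import numpy as np


def first_examples_of_class(images, one_hot_labels, class_index, count=5):
    """Return (images, None) for the first `count` examples of one class.
    Hypothetical helper equivalent to the list comprehensions above."""
    mask = np.argmax(one_hot_labels, axis=1) == class_index
    return images[mask][:count], None
```

For example, `validation_class_zero = first_examples_of_class(test_images, test_labels, 0)`.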


FYI - MobileNet Architecture Summary from TF2 (gives names of layers)

Model: "mobilenet_1.00_224"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_13 (InputLayer) | [(None, 224, 224, 3)] | 0 |
| conv1_pad (ZeroPadding2D) | (None, 225, 225, 3) | 0 |
| conv1 (Conv2D) | (None, 112, 112, 32) | 864 |
| conv1_bn (BatchNormalization) | (None, 112, 112, 32) | 128 |
| conv1_relu (ReLU) | (None, 112, 112, 32) | 0 |
| conv_dw_1 (DepthwiseConv2D) | (None, 112, 112, 32) | 288 |
| conv_dw_1_bn (BatchNormalization) | (None, 112, 112, 32) | 128 |
| conv_dw_1_relu (ReLU) | (None, 112, 112, 32) | 0 |
| conv_pw_1 (Conv2D) | (None, 112, 112, 64) | 2048 |
| conv_pw_1_bn (BatchNormalization) | (None, 112, 112, 64) | 256 |
| conv_pw_1_relu (ReLU) | (None, 112, 112, 64) | 0 |
| conv_pad_2 (ZeroPadding2D) | (None, 113, 113, 64) | 0 |
| conv_dw_2 (DepthwiseConv2D) | (None, 56, 56, 64) | 576 |
| conv_dw_2_bn (BatchNormalization) | (None, 56, 56, 64) | 256 |
| conv_dw_2_relu (ReLU) | (None, 56, 56, 64) | 0 |
| conv_pw_2 (Conv2D) | (None, 56, 56, 128) | 8192 |
| conv_pw_2_bn (BatchNormalization) | (None, 56, 56, 128) | 512 |
| conv_pw_2_relu (ReLU) | (None, 56, 56, 128) | 0 |
| conv_dw_3 (DepthwiseConv2D) | (None, 56, 56, 128) | 1152 |
| conv_dw_3_bn (BatchNormalization) | (None, 56, 56, 128) | 512 |
| conv_dw_3_relu (ReLU) | (None, 56, 56, 128) | 0 |
| conv_pw_3 (Conv2D) | (None, 56, 56, 128) | 16384 |
| conv_pw_3_bn (BatchNormalization) | (None, 56, 56, 128) | 512 |
| conv_pw_3_relu (ReLU) | (None, 56, 56, 128) | 0 |
| conv_pad_4 (ZeroPadding2D) | (None, 57, 57, 128) | 0 |
| conv_dw_4 (DepthwiseConv2D) | (None, 28, 28, 128) | 1152 |
| conv_dw_4_bn (BatchNormalization) | (None, 28, 28, 128) | 512 |
| conv_dw_4_relu (ReLU) | (None, 28, 28, 128) | 0 |
| conv_pw_4 (Conv2D) | (None, 28, 28, 256) | 32768 |
| conv_pw_4_bn (BatchNormalization) | (None, 28, 28, 256) | 1024 |
| conv_pw_4_relu (ReLU) | (None, 28, 28, 256) | 0 |
| conv_dw_5 (DepthwiseConv2D) | (None, 28, 28, 256) | 2304 |
| conv_dw_5_bn (BatchNormalization) | (None, 28, 28, 256) | 1024 |
| conv_dw_5_relu (ReLU) | (None, 28, 28, 256) | 0 |
| conv_pw_5 (Conv2D) | (None, 28, 28, 256) | 65536 |
| conv_pw_5_bn (BatchNormalization) | (None, 28, 28, 256) | 1024 |
| conv_pw_5_relu (ReLU) | (None, 28, 28, 256) | 0 |
| conv_pad_6 (ZeroPadding2D) | (None, 29, 29, 256) | 0 |
| conv_dw_6 (DepthwiseConv2D) | (None, 14, 14, 256) | 2304 |
| conv_dw_6_bn (BatchNormalization) | (None, 14, 14, 256) | 1024 |
| conv_dw_6_relu (ReLU) | (None, 14, 14, 256) | 0 |
| conv_pw_6 (Conv2D) | (None, 14, 14, 512) | 131072 |
| conv_pw_6_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_pw_6_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_dw_7 (DepthwiseConv2D) | (None, 14, 14, 512) | 4608 |
| conv_dw_7_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_dw_7_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_pw_7 (Conv2D) | (None, 14, 14, 512) | 262144 |
| conv_pw_7_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_pw_7_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_dw_8 (DepthwiseConv2D) | (None, 14, 14, 512) | 4608 |
| conv_dw_8_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_dw_8_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_pw_8 (Conv2D) | (None, 14, 14, 512) | 262144 |
| conv_pw_8_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_pw_8_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_dw_9 (DepthwiseConv2D) | (None, 14, 14, 512) | 4608 |
| conv_dw_9_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_dw_9_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_pw_9 (Conv2D) | (None, 14, 14, 512) | 262144 |
| conv_pw_9_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_pw_9_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_dw_10 (DepthwiseConv2D) | (None, 14, 14, 512) | 4608 |
| conv_dw_10_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_dw_10_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_pw_10 (Conv2D) | (None, 14, 14, 512) | 262144 |
| conv_pw_10_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_pw_10_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_dw_11 (DepthwiseConv2D) | (None, 14, 14, 512) | 4608 |
| conv_dw_11_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_dw_11_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_pw_11 (Conv2D) | (None, 14, 14, 512) | 262144 |
| conv_pw_11_bn (BatchNormalization) | (None, 14, 14, 512) | 2048 |
| conv_pw_11_relu (ReLU) | (None, 14, 14, 512) | 0 |
| conv_pad_12 (ZeroPadding2D) | (None, 15, 15, 512) | 0 |
| conv_dw_12 (DepthwiseConv2D) | (None, 7, 7, 512) | 4608 |
| conv_dw_12_bn (BatchNormalization) | (None, 7, 7, 512) | 2048 |
| conv_dw_12_relu (ReLU) | (None, 7, 7, 512) | 0 |
| conv_pw_12 (Conv2D) | (None, 7, 7, 1024) | 524288 |
| conv_pw_12_bn (BatchNormalization) | (None, 7, 7, 1024) | 4096 |
| conv_pw_12_relu (ReLU) | (None, 7, 7, 1024) | 0 |
| conv_dw_13 (DepthwiseConv2D) | (None, 7, 7, 1024) | 9216 |
| conv_dw_13_bn (BatchNormalization) | (None, 7, 7, 1024) | 4096 |
| conv_dw_13_relu (ReLU) | (None, 7, 7, 1024) | 0 |
| conv_pw_13 (Conv2D) | (None, 7, 7, 1024) | 1048576 |
| conv_pw_13_bn (BatchNormalization) | (None, 7, 7, 1024) | 4096 |
| conv_pw_13_relu (ReLU) | (None, 7, 7, 1024) | 0 |
| global_average_pooling2d_2 (GlobalAveragePooling2D) | (None, 1024) | 0 |
| reshape_1 (Reshape) | (None, 1, 1, 1024) | 0 |
| dropout (Dropout) | (None, 1, 1, 1024) | 0 |
| conv_preds (Conv2D) | (None, 1, 1, 1000) | 1025000 |
| reshape_2 (Reshape) | (None, 1000) | 0 |
| act_softmax (Activation) | (None, 1000) | 0 |

Total params: 4,253,864
Trainable params: 4,231,976
Non-trainable params: 21,888

FYI - ResNet50 Architecture Summary from TF2 (gives names of layers)

Model: "resnet50"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_14 (InputLayer) [(None, 224, 224, 3) 0

__________________________________________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 230, 230, 3) 0 input_14[0][0]

__________________________________________________________________________________________________

conv1_conv (Conv2D) (None, 112, 112, 64) 9472 conv1_pad[0][0]

__________________________________________________________________________________________________

conv1_bn (BatchNormalization) (None, 112, 112, 64) 256 conv1_conv[0][0]

__________________________________________________________________________________________________

conv1_relu (Activation) (None, 112, 112, 64) 0 conv1_bn[0][0]

__________________________________________________________________________________________________

pool1_pad (ZeroPadding2D) (None, 114, 114, 64) 0 conv1_relu[0][0]

__________________________________________________________________________________________________

pool1_pool (MaxPooling2D) (None, 56, 56, 64) 0 pool1_pad[0][0]

__________________________________________________________________________________________________

conv2_block1_1_conv (Conv2D) (None, 56, 56, 64) 4160 pool1_pool[0][0]

__________________________________________________________________________________________________

conv2_block1_1_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_1_relu (Activation (None, 56, 56, 64) 0 conv2_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_2_conv (Conv2D) (None, 56, 56, 64) 36928 conv2_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_2_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_2_relu (Activation (None, 56, 56, 64) 0 conv2_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_0_conv (Conv2D) (None, 56, 56, 256) 16640 pool1_pool[0][0]

__________________________________________________________________________________________________

conv2_block1_3_conv (Conv2D) (None, 56, 56, 256) 16640 conv2_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_0_bn (BatchNormali (None, 56, 56, 256) 1024 conv2_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_3_bn (BatchNormali (None, 56, 56, 256) 1024 conv2_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_add (Add) (None, 56, 56, 256) 0 conv2_block1_0_bn[0][0]

conv2_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_out (Activation) (None, 56, 56, 256) 0 conv2_block1_add[0][0]

__________________________________________________________________________________________________

conv2_block2_1_conv (Conv2D) (None, 56, 56, 64) 16448 conv2_block1_out[0][0]

__________________________________________________________________________________________________

conv2_block2_1_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_1_relu (Activation (None, 56, 56, 64) 0 conv2_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_2_conv (Conv2D) (None, 56, 56, 64) 36928 conv2_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_2_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_2_relu (Activation (None, 56, 56, 64) 0 conv2_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_3_conv (Conv2D) (None, 56, 56, 256) 16640 conv2_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_3_bn (BatchNormali (None, 56, 56, 256) 1024 conv2_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_add (Add) (None, 56, 56, 256) 0 conv2_block1_out[0][0]

conv2_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_out (Activation) (None, 56, 56, 256) 0 conv2_block2_add[0][0]

__________________________________________________________________________________________________

conv2_block3_1_conv (Conv2D) (None, 56, 56, 64) 16448 conv2_block2_out[0][0]

__________________________________________________________________________________________________

conv2_block3_1_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_1_relu (Activation (None, 56, 56, 64) 0 conv2_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_2_conv (Conv2D) (None, 56, 56, 64) 36928 conv2_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_2_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_2_relu (Activation (None, 56, 56, 64) 0 conv2_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_3_conv (Conv2D) (None, 56, 56, 256) 16640 conv2_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_3_bn (BatchNormali (None, 56, 56, 256) 1024 conv2_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_add (Add) (None, 56, 56, 256) 0 conv2_block2_out[0][0]

conv2_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_out (Activation) (None, 56, 56, 256) 0 conv2_block3_add[0][0]

__________________________________________________________________________________________________

conv3_block1_1_conv (Conv2D) (None, 28, 28, 128) 32896 conv2_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block1_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_1_relu (Activation (None, 28, 28, 128) 0 conv3_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_2_conv (Conv2D) (None, 28, 28, 128) 147584 conv3_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_2_relu (Activation (None, 28, 28, 128) 0 conv3_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_0_conv (Conv2D) (None, 28, 28, 512) 131584 conv2_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block1_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_0_bn (BatchNormali (None, 28, 28, 512) 2048 conv3_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_3_bn (BatchNormali (None, 28, 28, 512) 2048 conv3_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_add (Add) (None, 28, 28, 512) 0 conv3_block1_0_bn[0][0]

conv3_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_out (Activation) (None, 28, 28, 512) 0 conv3_block1_add[0][0]

__________________________________________________________________________________________________

conv3_block2_1_conv (Conv2D) (None, 28, 28, 128) 65664 conv3_block1_out[0][0]

__________________________________________________________________________________________________

conv3_block2_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_1_relu (Activation (None, 28, 28, 128) 0 conv3_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_2_conv (Conv2D) (None, 28, 28, 128) 147584 conv3_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_2_relu (Activation (None, 28, 28, 128) 0 conv3_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_3_bn (BatchNormali (None, 28, 28, 512) 2048 conv3_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_add (Add) (None, 28, 28, 512) 0 conv3_block1_out[0][0]

conv3_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_out (Activation) (None, 28, 28, 512) 0 conv3_block2_add[0][0]

__________________________________________________________________________________________________

conv3_block3_1_conv (Conv2D) (None, 28, 28, 128) 65664 conv3_block2_out[0][0]

__________________________________________________________________________________________________

conv3_block3_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_1_relu (Activation (None, 28, 28, 128) 0 conv3_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_2_conv (Conv2D) (None, 28, 28, 128) 147584 conv3_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_2_relu (Activation (None, 28, 28, 128) 0 conv3_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_3_bn (BatchNormali (None, 28, 28, 512) 2048 conv3_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_add (Add) (None, 28, 28, 512) 0 conv3_block2_out[0][0]

conv3_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_out (Activation) (None, 28, 28, 512) 0 conv3_block3_add[0][0]

__________________________________________________________________________________________________

conv3_block4_1_conv (Conv2D) (None, 28, 28, 128) 65664 conv3_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block4_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_1_relu (Activation (None, 28, 28, 128) 0 conv3_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_2_conv (Conv2D) (None, 28, 28, 128) 147584 conv3_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_2_relu (Activation (None, 28, 28, 128) 0 conv3_block4_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block4_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_3_bn (BatchNormali (None, 28, 28, 512) 2048 conv3_block4_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_add (Add) (None, 28, 28, 512) 0 conv3_block3_out[0][0]

conv3_block4_3_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_out (Activation) (None, 28, 28, 512) 0 conv3_block4_add[0][0]

__________________________________________________________________________________________________

conv4_block1_1_conv (Conv2D) (None, 14, 14, 256) 131328 conv3_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block1_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_1_relu (Activation (None, 14, 14, 256) 0 conv4_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_2_conv (Conv2D) (None, 14, 14, 256) 590080 conv4_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_2_relu (Activation (None, 14, 14, 256) 0 conv4_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_0_conv (Conv2D) (None, 14, 14, 1024) 525312 conv3_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block1_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_0_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_3_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_add (Add) (None, 14, 14, 1024) 0 conv4_block1_0_bn[0][0]

conv4_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_out (Activation) (None, 14, 14, 1024) 0 conv4_block1_add[0][0]

__________________________________________________________________________________________________

conv4_block2_1_conv (Conv2D) (None, 14, 14, 256) 262400 conv4_block1_out[0][0]

__________________________________________________________________________________________________

conv4_block2_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_1_relu (Activation (None, 14, 14, 256) 0 conv4_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_2_conv (Conv2D) (None, 14, 14, 256) 590080 conv4_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_2_relu (Activation (None, 14, 14, 256) 0 conv4_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_3_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_add (Add) (None, 14, 14, 1024) 0 conv4_block1_out[0][0]

conv4_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_out (Activation) (None, 14, 14, 1024) 0 conv4_block2_add[0][0]

__________________________________________________________________________________________________

conv4_block3_1_conv (Conv2D) (None, 14, 14, 256) 262400 conv4_block2_out[0][0]

__________________________________________________________________________________________________

conv4_block3_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_1_relu (Activation (None, 14, 14, 256) 0 conv4_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_2_conv (Conv2D) (None, 14, 14, 256) 590080 conv4_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_2_relu (Activation (None, 14, 14, 256) 0 conv4_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_3_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_add (Add) (None, 14, 14, 1024) 0 conv4_block2_out[0][0]

conv4_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_out (Activation) (None, 14, 14, 1024) 0 conv4_block3_add[0][0]

__________________________________________________________________________________________________

conv4_block4_1_conv (Conv2D) (None, 14, 14, 256) 262400 conv4_block3_out[0][0]

__________________________________________________________________________________________________

conv4_block4_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_1_relu (Activation (None, 14, 14, 256) 0 conv4_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_2_conv (Conv2D) (None, 14, 14, 256) 590080 conv4_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_2_relu (Activation (None, 14, 14, 256) 0 conv4_block4_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block4_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_3_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block4_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_add (Add) (None, 14, 14, 1024) 0 conv4_block3_out[0][0]

conv4_block4_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_out (Activation) (None, 14, 14, 1024) 0 conv4_block4_add[0][0]

__________________________________________________________________________________________________

conv4_block5_1_conv (Conv2D) (None, 14, 14, 256) 262400 conv4_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block5_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_1_relu (Activation (None, 14, 14, 256) 0 conv4_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_2_conv (Conv2D) (None, 14, 14, 256) 590080 conv4_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_2_relu (Activation (None, 14, 14, 256) 0 conv4_block5_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block5_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_3_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block5_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_add (Add) (None, 14, 14, 1024) 0 conv4_block4_out[0][0]

conv4_block5_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_out (Activation) (None, 14, 14, 1024) 0 conv4_block5_add[0][0]

__________________________________________________________________________________________________

conv4_block6_1_conv (Conv2D) (None, 14, 14, 256) 262400 conv4_block5_out[0][0]

__________________________________________________________________________________________________

conv4_block6_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_1_relu (Activation (None, 14, 14, 256) 0 conv4_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_2_conv (Conv2D) (None, 14, 14, 256) 590080 conv4_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_2_relu (Activation (None, 14, 14, 256) 0 conv4_block6_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_3_bn (BatchNormali (None, 14, 14, 1024) 4096 conv4_block6_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_add (Add) (None, 14, 14, 1024) 0 conv4_block5_out[0][0]

conv4_block6_3_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_out (Activation) (None, 14, 14, 1024) 0 conv4_block6_add[0][0]

__________________________________________________________________________________________________

conv5_block1_1_conv (Conv2D) (None, 7, 7, 512) 524800 conv4_block6_out[0][0]

__________________________________________________________________________________________________

conv5_block1_1_bn (BatchNormali (None, 7, 7, 512) 2048 conv5_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block1_1_relu (Activation (None, 7, 7, 512) 0 conv5_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block1_2_conv (Conv2D) (None, 7, 7, 512) 2359808 conv5_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block1_2_bn (BatchNormali (None, 7, 7, 512) 2048 conv5_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block1_2_relu (Activation (None, 7, 7, 512) 0 conv5_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv5_block1_0_conv (Conv2D) (None, 7, 7, 2048) 2099200 conv4_block6_out[0][0]

__________________________________________________________________________________________________

conv5_block1_3_conv (Conv2D) (None, 7, 7, 2048) 1050624 conv5_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv5_block1_0_bn (BatchNormali (None, 7, 7, 2048) 8192 conv5_block1_0_conv[0][0]

__________________________________________________________________________________________________

conv5_block1_3_bn (BatchNormali (None, 7, 7, 2048) 8192 conv5_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv5_block1_add (Add) (None, 7, 7, 2048) 0 conv5_block1_0_bn[0][0]

conv5_block1_3_bn[0][0]

__________________________________________________________________________________________________

conv5_block1_out (Activation) (None, 7, 7, 2048) 0 conv5_block1_add[0][0]

__________________________________________________________________________________________________

conv5_block2_1_conv (Conv2D) (None, 7, 7, 512) 1049088 conv5_block1_out[0][0]

__________________________________________________________________________________________________

conv5_block2_1_bn (BatchNormali (None, 7, 7, 512) 2048 conv5_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block2_1_relu (Activation (None, 7, 7, 512) 0 conv5_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block2_2_conv (Conv2D) (None, 7, 7, 512) 2359808 conv5_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block2_2_bn (BatchNormali (None, 7, 7, 512) 2048 conv5_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block2_2_relu (Activation (None, 7, 7, 512) 0 conv5_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv5_block2_3_conv (Conv2D) (None, 7, 7, 2048) 1050624 conv5_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv5_block2_3_bn (BatchNormali (None, 7, 7, 2048) 8192 conv5_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv5_block2_add (Add) (None, 7, 7, 2048) 0 conv5_block1_out[0][0]

conv5_block2_3_bn[0][0]

__________________________________________________________________________________________________

conv5_block2_out (Activation) (None, 7, 7, 2048) 0 conv5_block2_add[0][0]

__________________________________________________________________________________________________

conv5_block3_1_conv (Conv2D) (None, 7, 7, 512) 1049088 conv5_block2_out[0][0]

__________________________________________________________________________________________________

conv5_block3_1_bn (BatchNormali (None, 7, 7, 512) 2048 conv5_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block3_1_relu (Activation (None, 7, 7, 512) 0 conv5_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block3_2_conv (Conv2D) (None, 7, 7, 512) 2359808 conv5_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block3_2_bn (BatchNormali (None, 7, 7, 512) 2048 conv5_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block3_2_relu (Activation (None, 7, 7, 512) 0 conv5_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv5_block3_3_conv (Conv2D) (None, 7, 7, 2048) 1050624 conv5_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv5_block3_3_bn (BatchNormali (None, 7, 7, 2048) 8192 conv5_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv5_block3_add (Add) (None, 7, 7, 2048) 0 conv5_block2_out[0][0]

conv5_block3_3_bn[0][0]

__________________________________________________________________________________________________

conv5_block3_out (Activation) (None, 7, 7, 2048) 0 conv5_block3_add[0][0]

__________________________________________________________________________________________________

avg_pool (GlobalAveragePooling2 (None, 2048) 0 conv5_block3_out[0][0]

__________________________________________________________________________________________________

probs (Dense) (None, 1000) 2049000 avg_pool[0][0]

==================================================================================================

Total params: 25,636,712

Trainable params: 25,583,592

Non-trainable params: 53,120

__________________________________________________________________________________________________
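The "Param #" column above follows simple per-layer arithmetic, and checking a few rows is a quick way to confirm you are reading the summary correctly. The layer names, the 3/4/6/3 block pattern and the 25,636,712 total appear to match the ResNet50 that ships with `tf.keras.applications`. The sketch below is a minimal illustration under these assumptions: every Conv2D has a bias, every BatchNormalization uses both `gamma` and `beta`, and the helper names (`conv2d_params`, `batchnorm_params`, `dense_params`) are hypothetical. They are not part of Keras or tf-explain.

```python
from typing import Tuple


# Hypothetical helpers (not a Keras/tf-explain API) that reproduce the
# "Param #" column for the layer types that appear in the summary above.

def conv2d_params(kernel_size: int, in_channels: int, out_channels: int,
                  use_bias: bool = True) -> int:
    """k*k*C_in*C_out weights, plus one bias per output channel (assumed)."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def batchnorm_params(channels: int) -> Tuple[int, int]:
    """Return (trainable, non_trainable) for BatchNormalization.

    gamma and beta are trained; moving_mean and moving_variance are not,
    which is where the summary's "Non-trainable params" come from.
    """
    return 2 * channels, 2 * channels


def dense_params(in_features: int, out_features: int) -> int:
    """Weight matrix plus one bias per output unit."""
    return in_features * out_features + out_features


# A few rows from the summary: 1x1 and 3x3 convs, the projection shortcut,
# a BatchNormalization layer and the final classifier.
print(conv2d_params(1, 128, 512))    # conv3_block2_3_conv
print(conv2d_params(3, 128, 128))    # conv3_block3_2_conv
print(conv2d_params(1, 512, 1024))   # conv4_block1_0_conv (shortcut)
print(sum(batchnorm_params(512)))    # conv3_block2_3_bn
print(dense_params(2048, 1000))      # probs
```

Running it should print 66048, 147584, 525312, 2048 and 2049000, matching the corresponding rows. The footer is consistent in the same way: 25,583,592 trainable plus 53,120 non-trainable gives the 25,636,712 total. The 53,120 non-trainable parameters are the BatchNormalization moving statistics, exactly half of all BatchNormalization parameters in the network.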
