TensorFlow Lite Setup: EXAMPLE & ISSUES from iDrone (follow the tensorflow.org site for current instructions)

WHY TensorFlow Lite over normal TensorFlow?

SMALL model file size AND TensorFlow Lite can use hardware acceleration through the Android Neural Networks API (IF the device runs Android 8.1, API level 27, or later). TensorFlow Lite is TensorFlow's lightweight solution for mobile and embedded devices. It enables on-device machine learning inference with low latency and a small binary size. TensorFlow Lite also supports hardware acceleration with the Android Neural Networks API.

WHY NOT TensorFlow Lite over normal TensorFlow?

It is new and the documentation is practically NON-existent: there is only a demo app, and that demo does only identification (classification), NOT localization. I can't figure out YET how to do localization with the tflite Interpreter class. NOTE: TensorFlow Lite is available only as a developer preview, so there MAY BE NO SUPPORT FOR LOCALIZATION YET (see the Dec. 2017 posting https://github.com/tensorflow/tensorflow/issues/15633).


1. DEMO CODE: Follow the directions at https://www.tensorflow.org/lite/guide/android to set up the demo code.

2. NEED: the Android NDK installed and set up in Android Studio (File -> Project Structure -> SDK Location; check that the NDK location is set there).

3. READ HERE for the Android SDK and NDK versions needed: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/contrib/lite. In April 2018 it said SDK > 26 and NDK > 14, yet https://www.tensorflow.org/mobile/tflite/demo_android says Android 5 (I noted that as SDK 22, but strictly Android 5.0 is API level 21 and 5.1 is API level 22). Which one???? The sample APK you can download uses SDK 26, while the manifest in the source code says minSdkVersion=21. Most likely both are right: SDK 26 is the SDK the app is compiled against, and minSdkVersion=21 is the oldest Android version (5.0) the app will install on.

4. TRIED on an Asus phone running Android 7.0: the sample compiled and ran.

5. TRIED on the x86 emulator: it would NOT work. I tried adding x86 support for the emulator using the 2 options shown in http://www.omnibuscode.com/board/board_tools/37435, but it did NOT work.

FILE FORMAT = .tflite for models. From here on: ****I DO NOT COMPLETELY UNDERSTAND ALL THE INPUT PARAMETERS YET****

Read the section on Freeze Graph at https://github.com/tensorflow/tensorflow/tree/master/tensorflow/contrib/lite and notice the steps are:

STEP 1: freeze the graph (input: .pb and .ckpt files; output: a frozen .pb file)

The concept of freezing: freezing a graph means removing all the variables from the TensorFlow graph and converting them into constants. During training, TensorFlow keeps the network's parameters (the weights and biases) as variables, because they have to change while the network learns from its training data. Once training is finished, those parameters no longer need to be variables, so everything can be turned into constants. Converting variables to constants gives a single self-contained file that is simpler and faster to load and run for inference (it does not make training any faster).

The user has to supply both the .pb and the .ckpt files.

Definitions:

- GraphDef (.pb): a protobuf that represents the TensorFlow training and/or computation graph. This contains operator, tensor, and variable definitions.

- CheckPoint (.ckpt): serialized variables from a TensorFlow graph. Note, this does not contain the graph structure, so alone it typically cannot be interpreted.

- FrozenGraphDef: a subclass of GraphDef that contains no variables. A GraphDef can be converted to a frozen GraphDef by taking a checkpoint and a GraphDef and converting every variable into a constant with the value looked up in the checkpoint.

- SavedModel: a collection of GraphDef and CheckPoint together with a signature that labels the input and output arguments to a model. A GraphDef and CheckPoint can be extracted from a SavedModel.

- TensorFlow Lite model (.tflite): a serialized FlatBuffer containing TensorFlow Lite operators and tensors for the TensorFlow Lite interpreter. This is most analogous to a TensorFlow frozen GraphDef.
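To make the FrozenGraphDef definition concrete, here is a toy Java model of what freezing does. It is NOT TensorFlow's API: ToyFreeze and Node are hypothetical stand-ins, a "graph" is just a list of named ops, and a "checkpoint" is a map from variable name to its saved values. Only the op names "VariableV2" and "Const" are real TensorFlow op names; everything else is an assumption made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Toy model of freezing, NOT TensorFlow's API: hypothetical classes for illustration only.
final class ToyFreeze {
    /** Hypothetical stand-in for one GraphDef node. */
    static final class Node {
        final String name;
        final String op;     // e.g. "Placeholder", "VariableV2", "MatMul", "Const"
        final float[] value; // only meaningful for "Const" nodes

        Node(String name, String op, float[] value) {
            this.name = name;
            this.op = op;
            this.value = value;
        }
    }

    /** Returns a copy of the graph in which every variable has become a constant. */
    static List<Node> freeze(List<Node> graph, Map<String, float[]> checkpoint) {
        List<Node> frozen = new ArrayList<>();
        for (Node node : graph) {
            if (node.op.equals("VariableV2")) {
                // Look up the trained value saved in the checkpoint under the variable's name.
                float[] saved = checkpoint.get(node.name);
                if (saved == null) {
                    throw new IllegalArgumentException("checkpoint has no value for " + node.name);
                }
                frozen.add(new Node(node.name, "Const", saved.clone()));
            } else {
                frozen.add(node); // every other op is copied unchanged
            }
        }
        return frozen;
    }
}
```

The real freeze_graph tool does the equivalent on a GraphDef and also keeps only the nodes that the requested output_node_names depend on, which is one reason those names matter.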

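Build the tool with bazel build tensorflow/python/tools:freeze_graph, then run bazel-bin/tensorflow/python/tools/freeze_graph with --input_graph (your .pb), --input_checkpoint (your .ckpt), --output_graph (the frozen .pb to write) and --output_node_names (add --input_binary=true if the input .pb is binary rather than text). NOTE: the output_node_names may not be obvious outside of the code that built the model. The easiest way to find them is to visualize the graph, either with graphviz or in TensorBoard.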

STEP 2: convert the frozen .pb file to .tflite (a FlatBuffer-format file that is smaller and faster to load on mobile)

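Build the converter with bazel build tensorflow/contrib/lite/toco:toco, then run bazel-bin/tensorflow/contrib/lite/toco/toco with --input_file (the frozen .pb), --output_file (the .tflite to write), --input_format=TENSORFLOW_GRAPHDEF and --output_format=TFLITE, plus --input_arrays, --output_arrays and --input_shapes. NOTE: setting --input_arrays, --output_arrays and --input_shapes is a bit trickier. The easiest way to find these values is to explore the graph in TensorBoard. Reuse the output node names you gave for inference in the freeze_graph step as --output_arrays. My first guess was input_shapes = ?,width_inputImage,height_inputImage,? but TensorFlow image tensors are normally laid out NHWC, so the order is batch,height,width,channels (e.g. 1,224,224,3 for a single 224x224 RGB image).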
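A sketch of what the --input_shapes value means, assuming the usual TensorFlow NHWC image layout (batch, height, width, channels). NhwcShape is a hypothetical helper, not part of TensorFlow Lite. It builds the flag string and shows where pixel (y, x), channel c lands in the flattened input buffer, which is the order you get from filling the input ByteBuffer row by row, pixel by pixel, R then G then B.

```java
// Hypothetical helper (not a TensorFlow Lite class) illustrating the NHWC layout.
final class NhwcShape {
    final int batch;
    final int height;
    final int width;
    final int channels;

    NhwcShape(int batch, int height, int width, int channels) {
        this.batch = batch;
        this.height = height;
        this.width = width;
        this.channels = channels;
    }

    /** The value to pass as --input_shapes: batch,height,width,channels. */
    String toInputShapesFlag() {
        return batch + "," + height + "," + width + "," + channels;
    }

    /** Total number of values in the input tensor. */
    int elementCount() {
        return batch * height * width * channels;
    }

    /** Position of element (b, y, x, c) in the flattened, row-major NHWC buffer. */
    int flatIndex(int b, int y, int x, int c) {
        return ((b * height + y) * width + x) * channels + c;
    }
}
```

For the demo's 224x224 MobileNet this gives "1,224,224,3". Height comes before width, which only changes anything when the input image is not square.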

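Reason to use Android 8.1 (API level 27) on the Asus phone if possible:

It supports the Android Neural Networks API (NNAPI), which can speed up neural network operations on the phone by running them on the GPU or other hardware accelerators (when the phone's vendor provides NNAPI drivers; otherwise NNAPI falls back to the CPU).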
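A minimal sketch of deciding whether to ask for NNAPI, assuming the only hard requirement checked is the API level (vendor drivers and op support still decide what actually gets accelerated). NnapiCheck is hypothetical. On a device you would pass android.os.Build.VERSION.SDK_INT and, if it returns true, enable NNAPI on the interpreter (the 2018-era Java Interpreter had a setUseNNAPI(boolean) method; check the current TensorFlow Lite docs for the equivalent).

```java
// Hypothetical helper: decides whether NNAPI can be requested at all on this Android version.
final class NnapiCheck {
    /** Android 8.1 (Build.VERSION_CODES.O_MR1) is API level 27, the first with NNAPI. */
    static final int NNAPI_MIN_API_LEVEL = 27;

    /** On Android, call with android.os.Build.VERSION.SDK_INT. */
    static boolean canRequestNnapi(int apiLevel) {
        return apiLevel >= NNAPI_MIN_API_LEVEL;
    }
}
```

The Asus phone on Android 7.0 (API level 24) gives false here, so the model would run on the CPU.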

OPTION 1 (modify Tensorflow lite program) ONCE you have Trained your model and converted to .tflite file do the following:

-

Use the demo app as your base code (add the UVCCamera library and OpenCV to it)

-

This demo code has the Camera2BasicFragment that currently tries to make an instance of the ImageClassifierQuantizedMobileNet

classifier = new ImageClassifierQuantizedMobileNet(getActivity());

-

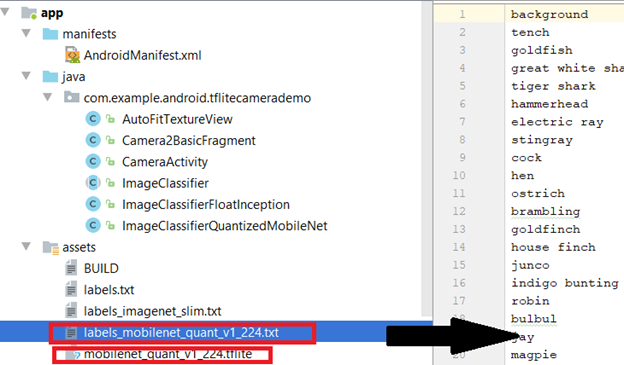

While you can change between this and an Inception model instance (need to alter the Camera2BasicFragment line in #2) you need to copy your trained network info over --specifically copy the *.tflite AND a *labels.txt file to the assets directory of the app. The *labels.txt file is the classes (in order of output vector) used in training. Node code is expecting the file for the model to be in mobilenet_quant_v1_224.tflite and the labels in labels_mobilenet_quant_v1_224.txt

-

Alter to take OpenCV frames rather than what currently have and give to stereo processing to get depth (if using this for user detection cnn) and then resize as approprite for fixed size CNN.
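Because the classes in *labels.txt must line up with the model's output vector, a small check at startup can catch a mismatched labels file early. This is a sketch, not the demo's code: LabelFile, readLabels and checkMatches are hypothetical names, and it assumes one label per line, with line i naming output index i. On Android the Reader would come from the assets, e.g. new InputStreamReader(getAssets().open("labels_mobilenet_quant_v1_224.txt")).

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reads *labels.txt and checks it against the model's output size.
final class LabelFile {
    /** Reads one class name per line; line i is the label for output index i. */
    static List<String> readLabels(Reader source) {
        List<String> labels = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                labels.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return labels;
    }

    /** Fails fast if the label count does not match the model's output vector length. */
    static void checkMatches(List<String> labels, int numOutputs) {
        if (labels.size() != numOutputs) {
            throw new IllegalStateException("labels file has " + labels.size()
                    + " lines but the model outputs " + numOutputs + " values");
        }
    }
}
```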

OPTION 2 (add TensorFlow Lite to your existing image capture app that uses the UVCCamera code and OpenCV): ONCE you have trained your model and converted it to a .tflite file, do the following:

1. Use your existing app that captures and processes stereo images with the UVCCamera library and OpenCV.

2. NOW integrate parts of the TensorFlow Lite demo code, such as the line inside Camera2BasicFragment that creates an instance of ImageClassifierQuantizedMobileNet:

classifier = new ImageClassifierQuantizedMobileNet(getActivity());

YOU will need to add this at the start of your app's MainActivity (e.g. in onCreate()). Inside an Activity, pass this instead of getActivity(), which is a Fragment method. The constructor loads the model file and can throw an IOException, so wrap it in try/catch as the demo does.

3. Add your trained network files to assets: copy the *.tflite AND the *labels.txt file to the app's assets directory (to use an Inception model instead, change the classifier line in #2). The *labels.txt file lists the classes used in training, in the order of the output vector. NOTE: the code expects the model file to be named mobilenet_quant_v1_224.tflite and the labels file labels_mobilenet_quant_v1_224.txt.

NOW, in the code where each new image frame comes in, AFTER any stereo processing is done you need to:

1. Resize the input (RGB or depth) to the input size the CNN expects.

2. Call the classifyFrame method that I copied from the Camera2BasicFragment class of the TensorFlow Lite demo app (below), passing your CHANGED (processed) frame as its bitmap argument. NOTE: the output is very specific: a formatted String showing the 3 most likely objects recognized. THIS WILL NEED TO CHANGE. You will need the output to be the top detected user, its ROI bounding box AND a label such as user_left, user_right, user_front or user_back (whatever the object labels are). Still need to figure out ImageClassifierQuantizedMobileNet and how to adjust it for region detection and not just identification (have not looked at this yet).

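private void classifyFrame(Bitmap bitmap) {
    // cameraDevice comes from the demo's Camera2 code; with UVCCamera, replace it with your own camera check.
    if (classifier == null || getActivity() == null || cameraDevice == null) {
        showToast("Uninitialized Classifier or invalid context.");
        return;
    }
    // Scale the incoming frame to the CNN input size (may return the same Bitmap if no scaling is needed).
    Bitmap input = Bitmap.createScaledBitmap(
            bitmap, classifier.getImageSizeX(), classifier.getImageSizeY(), true);
    String textToShow = classifier.classifyFrame(input);
    // Free only the scaled copy; the caller still owns the original frame.
    if (input != bitmap) {
        input.recycle();
    }
    showToast(textToShow);
}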
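For frames that are not Bitmaps (for example a depth map coming out of the OpenCV stereo step), the resize step can be sketched in plain Java. FrameResize is hypothetical and uses nearest-neighbour sampling on a row-major pixel array (the layout Bitmap.getPixels() fills); Bitmap.createScaledBitmap with filtering, or OpenCV's Imgproc.resize, would be the usual library choices.

```java
// Hypothetical helper: nearest-neighbour resize of a row-major pixel array.
final class FrameResize {
    static int[] resizeNearest(int[] src, int srcWidth, int srcHeight, int dstWidth, int dstHeight) {
        if (src.length != srcWidth * srcHeight) {
            throw new IllegalArgumentException("src length does not match srcWidth * srcHeight");
        }
        int[] dst = new int[dstWidth * dstHeight];
        for (int y = 0; y < dstHeight; y++) {
            // Source row that this destination row samples from (long math avoids overflow).
            int sy = (int) ((long) y * srcHeight / dstHeight);
            for (int x = 0; x < dstWidth; x++) {
                int sx = (int) ((long) x * srcWidth / dstWidth);
                dst[y * dstWidth + x] = src[sy * srcWidth + sx];
            }
        }
        return dst;
    }
}
```

Nearest-neighbour never blends neighbouring values, which is arguably what you want for depth: averaging a near and a far pixel at an object edge invents a depth that is not in the scene. Like the demo's resize, it also stretches the frame to the CNN's fixed size without preserving the aspect ratio.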

NOTE: ImageClassifierQuantizedMobileNet has the following method, which runs the frame through the CNN using the tflite object. This is what restricts it to returning only an array of probabilities, one per object class, and NOT localization.

protected void runInference() {
    tflite.run(imgData, labelProbArray);
}

And tflite is an instance of org.tensorflow.lite.Interpreter

tflite = new Interpreter(loadModelFile(activity));
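Since runInference only fills labelProbArray, turning it into the "top 3" text is a separate step. The sketch below assumes the quantized model's output is one unsigned byte per class, read as probability = byte / 255 (the exact scale really comes from the model's quantization parameters), and picks the top k with a size-k min-heap. TopK, Recognition and describe are hypothetical names, not the demo's classes.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;
import java.util.PriorityQueue;

// Hypothetical helper: top-k classes from a quantized probability output.
final class TopK {
    static final class Recognition {
        final int index;          // position in the output vector = line in labels.txt
        final float probability;

        Recognition(int index, float probability) {
            this.index = index;
            this.probability = probability;
        }
    }

    private static final Comparator<Recognition> BY_PROBABILITY =
            Comparator.comparingDouble(r -> r.probability);

    /** One quantized output byte (0..255 held in a signed Java byte) as a probability in [0, 1]. */
    static float dequantize(byte value) {
        return (value & 0xff) / 255.0f;
    }

    static float[] dequantizeAll(byte[] values) {
        float[] probs = new float[values.length];
        for (int i = 0; i < values.length; i++) {
            probs[i] = dequantize(values[i]);
        }
        return probs;
    }

    /** The k most probable classes, highest first. */
    static List<Recognition> topK(float[] probs, int k) {
        PriorityQueue<Recognition> heap = new PriorityQueue<>(BY_PROBABILITY);
        for (int i = 0; i < probs.length; i++) {
            heap.add(new Recognition(i, probs[i]));
            if (heap.size() > k) {
                heap.poll(); // drop the least probable so only the best k remain
            }
        }
        List<Recognition> result = new ArrayList<>(heap);
        result.sort(BY_PROBABILITY.reversed());
        return result;
    }

    /** One "label: probability" line per result, similar in spirit to the demo's toast text. */
    static String describe(List<Recognition> top, List<String> labels) {
        StringBuilder sb = new StringBuilder();
        for (Recognition r : top) {
            sb.append(labels.get(r.index)).append(": ")
              .append(String.format(Locale.ROOT, "%.2f", r.probability)).append('\n');
        }
        return sb.toString();
    }
}
```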

Special Note on Quantized vs. Float Frozen Models (NOT SURE if still true)

The ImageClassifier.java included with the TensorFlow Lite Android demo expects a quantized model. At the time of writing, only one of the MobileNet models was provided in quantized form: MobileNet 1.0 224 Quant. To use the float models, swap in the ImageClassifier.java from the TensorFlow for Poets TF Lite demo source, which is written for float models: https://github.com/googlecodelabs/tensorflow-for-poets-2/blob/master/android/tflite/app/src/main/java/com/example/android/tflitecamerademo/ImageClassifier.java. Do a diff and you'll see several important differences in implementation. Another option is converting the float models to quantized ones using TOCO: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/lite/toco/g3doc/cmdline_examples.md

ALSO see https://stackoverflow.com/questions/47761514/tensorflow-lite-pretrained-model-does-not-work-in-android-demo for a new ImageClassifier.java class that someone created that doesn't need quantized models.
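The float/quantized difference shows up first in the input buffer. A sketch, assuming an int[] of ARGB pixels and the (value - 128) / 128 normalization that MobileNet-style float models commonly use (check what your training actually used): the quantized model takes 1 byte per channel, the float model a 4-byte float per channel, so the same 224x224 RGB frame needs a 150,528-byte buffer versus a 602,112-byte one. InputBuffers and its methods are hypothetical, not the demo's code.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper: builds quantized vs. float input buffers from ARGB pixels.
final class InputBuffers {
    // ASSUMED normalization for float models: maps 0..255 to roughly -1..1.
    static final float IMAGE_MEAN = 128.0f;
    static final float IMAGE_STD = 128.0f;

    /** Quantized model: one unsigned byte per channel, R, G, B order. */
    static ByteBuffer quantizedInput(int[] argbPixels) {
        ByteBuffer buf = ByteBuffer.allocateDirect(argbPixels.length * 3);
        buf.order(ByteOrder.nativeOrder());
        for (int p : argbPixels) {
            buf.put((byte) ((p >> 16) & 0xff)); // R
            buf.put((byte) ((p >> 8) & 0xff));  // G
            buf.put((byte) (p & 0xff));         // B
        }
        buf.rewind();
        return buf;
    }

    /** Float model: one normalized 4-byte float per channel, R, G, B order. */
    static ByteBuffer floatInput(int[] argbPixels) {
        ByteBuffer buf = ByteBuffer.allocateDirect(argbPixels.length * 3 * Float.BYTES);
        buf.order(ByteOrder.nativeOrder());
        for (int p : argbPixels) {
            buf.putFloat((((p >> 16) & 0xff) - IMAGE_MEAN) / IMAGE_STD);
            buf.putFloat((((p >> 8) & 0xff) - IMAGE_MEAN) / IMAGE_STD);
            buf.putFloat(((p & 0xff) - IMAGE_MEAN) / IMAGE_STD);
        }
        buf.rewind();
        return buf;
    }
}
```

The output side changes the same way: a quantized model writes bytes (e.g. a byte[1][numLabels] array) while a float model writes floats, which is one of the differences a diff of the two ImageClassifier.java files should show.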

IMPORTANT: TensorFlow Lite and SSD support. Someone is trying to add this to the repository's demo. It would include code that gives not only the probability of each class but also region (bounding box) information: https://github.com/tensorflow/tensorflow/issues/14731

See https://github.com/andrewharp/tensorflow/tree/master/tensorflow/contrib/lite/java/demo/app/src/main/java/com/example/android/tflitecamerademo for what may be their added code???
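If an SSD-style TensorFlow Lite model becomes available, its outputs would need decoding into the "top detected user, ROI bounding box AND label" described above. This sketch ASSUMES the usual SSD-style output: per detection, a box as {ymin, xmin, ymax, xmax} normalized to 0..1, a class index (stored as a float) and a score. Check the real model's output tensors, and whether index 0 is a background class, before relying on it. DetectionDecoder is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: turns ASSUMED SSD-style outputs into labelled pixel boxes.
final class DetectionDecoder {
    static final class Detection {
        final String label;
        final float score;
        final float left, top, right, bottom; // pixel coordinates in the frame

        Detection(String label, float score, float left, float top, float right, float bottom) {
            this.label = label;
            this.score = score;
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }
    }

    static List<Detection> decode(float[][] boxes, float[] classes, float[] scores,
                                  List<String> labels, float minScore,
                                  int frameWidth, int frameHeight) {
        List<Detection> detections = new ArrayList<>();
        for (int i = 0; i < scores.length; i++) {
            if (scores[i] < minScore) {
                continue; // too uncertain to report
            }
            float[] box = boxes[i]; // ASSUMED order: ymin, xmin, ymax, xmax, each in 0..1
            detections.add(new Detection(
                    labels.get(Math.round(classes[i])), scores[i],
                    box[1] * frameWidth, box[0] * frameHeight,
                    box[3] * frameWidth, box[2] * frameHeight));
        }
        detections.sort((a, b) -> Float.compare(b.score, a.score)); // best detection first
        return detections;
    }
}
```

With labels such as user_left, user_right, user_front and user_back, the first element of the returned list would be the "top detected user" and its box the ROI.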