Cloned Code for TensorFlow Android

See https://www.tensorflow.org/mobile/ for the latest details and, more specifically, https://www.tensorflow.org/mobile/android_build

How to Clone a current example

Step 1: Clone the TensorFlow code from GitHub

git clone https://github.com/tensorflow/tensorflow

You will now find the Android example in the following directory

Step 2: Open the project

Choose File -> Open Project and select the directory shown above. You may then have to resolve any Gradle file updates that Android Studio asks for.

Step 3: Edit the build.gradle file

Open the build.gradle file (you can go to 1:Project in the side panel and find it under the Gradle Scripts zippy under Android). Look for the nativeBuildSystem variable and set it to none if it isn't already:

// set to 'bazel', 'cmake', 'makefile', 'none'

def nativeBuildSystem = 'none'

Step 4: Run the code

Click the Run button (the green arrow) or use Run -> Run 'android' from the top menu.

If it asks you to use Instant Run, click Proceed Without Instant Run.

Also, you need to have an Android device plugged in with developer options enabled at this point. See here for more details on setting up developer devices.

This cloned code contains multiple launchable activities:

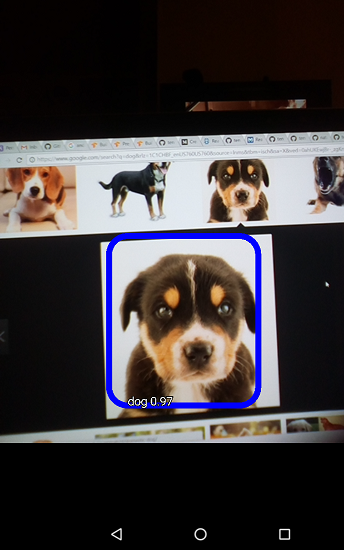

Here is the result of running the TF Detection (recognition AND localization)

--> it is trained on ImageNet (1000 objects)

NOTE: Object tracking is currently NOT supported in the Java/Android example application based on the TFDetect activity, so it performs only object identification and localization, NOT tracking. See the README file in the cloned code, which says "object tracking is not available in the "TF Detect" activity."

Explaining the Code and Project Setup

- TensorFlow is written in C++.

- To build for Android, we have to use JNI (Java Native Interface) to call C++ functions such as loadModel, getPredictions, etc.
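As a rough illustration of the Java side of such a JNI bridge, the sketch below declares two native methods. The names loadModel and getPredictions come from the text above; their parameter and return types, and the class name NativeBridgeSketch, are assumptions made only for this example. No native library is loaded, so the methods are inspected but never called.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

// Hypothetical Java side of a JNI bridge. The method names come from the text;
// the parameter and return types are assumptions made for illustration.
class NativeBridgeSketch {
    // "native" means the method body lives in a compiled C++ library, which a
    // real app would have to load (System.loadLibrary) before calling these.
    static native boolean loadModel(String modelPath);
    static native float[] getPredictions(float[] pixels);

    // Reports whether the named method is declared native, without calling it.
    // Calling it here would throw UnsatisfiedLinkError: no library is loaded.
    static boolean isDeclaredNative(String methodName) {
        for (Method m : NativeBridgeSketch.class.getDeclaredMethods()) {
            if (m.getName().equals(methodName)) {
                return Modifier.isNative(m.getModifiers());
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("loadModel native? " + isDeclaredNative("loadModel"));
        System.out.println("getPredictions native? " + isDeclaredNative("getPredictions"));
    }
}
```

In the demo app you do not write these declarations yourself, because the TensorFlow Android library already wraps the native calls.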

Android Inference Library

Because Android apps need to be written in Java, and core TensorFlow is in C++, TensorFlow has a JNI library to interface between the two. Its interface is aimed only at inference, so it provides the ability to load a graph, set up inputs, and run the model to calculate particular outputs. You can see the full documentation for the minimal set of methods in TensorFlowInferenceInterface.java
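The "set up inputs, run the model, read outputs" flow can be sketched in plain Java. This is a minimal sketch, not the library's API: InferenceSession and FakeDoublingSession are hypothetical names, and the fake session simply doubles its input so the example runs without Android or a model file. The method names feed, run, and fetch are modeled on the library class, but check TensorFlowInferenceInterface.java for the actual signatures.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

// Hypothetical interface for illustration only; not the TensorFlow API.
interface InferenceSession {
    void feed(String inputName, float[] values);  // set up an input
    void run(String[] outputNames);               // compute the requested outputs
    void fetch(String outputName, float[] dst);   // copy an output into dst
}

// Stand-in "model" that doubles every value fed to the node named "input".
class FakeDoublingSession implements InferenceSession {
    private final Map<String, float[]> inputs = new HashMap<>();
    private final Map<String, float[]> outputs = new HashMap<>();

    @Override
    public void feed(String inputName, float[] values) {
        inputs.put(inputName, values.clone());
    }

    @Override
    public void run(String[] outputNames) {
        float[] in = inputs.get("input");
        if (in == null) {
            throw new IllegalStateException("feed \"input\" before calling run");
        }
        float[] out = new float[in.length];
        for (int i = 0; i < in.length; i++) {
            out[i] = 2f * in[i];
        }
        for (String name : outputNames) {
            outputs.put(name, out.clone());
        }
    }

    @Override
    public void fetch(String outputName, float[] dst) {
        float[] out = outputs.get(outputName);
        if (out == null) {
            throw new IllegalArgumentException("unknown output: " + outputName);
        }
        System.arraycopy(out, 0, dst, 0, Math.min(out.length, dst.length));
    }
}

class InferenceFlowDemo {
    // The three-step flow: feed the input, run the graph, fetch the output.
    static float[] infer(InferenceSession session, float[] pixels) {
        session.feed("input", pixels);
        session.run(new String[] {"output"});
        float[] result = new float[pixels.length];
        session.fetch("output", result);
        return result;
    }

    public static void main(String[] args) {
        float[] result = infer(new FakeDoublingSession(), new float[] {1f, 2.5f});
        System.out.println(Arrays.toString(result));
    }
}
```

In the real app the node names ("input" and "output" here) must match the names used in the trained graph.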

The Manifest File (AndroidManifest.xml)

<< Asks permission to use the camera as well as

<< Four activities are defined as launchers, so four apps associated with them are placed on the device

<< ClassifierActivity simply labels the objects found (identity only)

<< DetectorActivity both identifies and locates the objects found

<< Activity to produce special effects on an image

<< Activity to perform speech processing

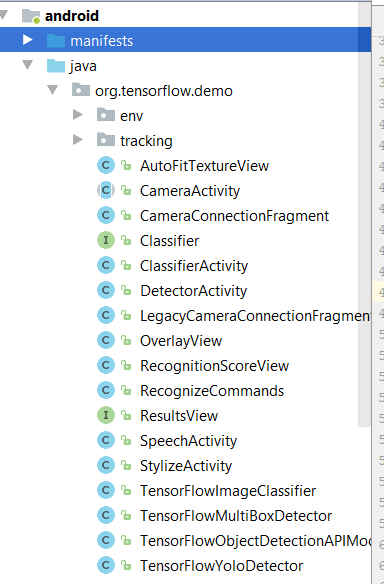

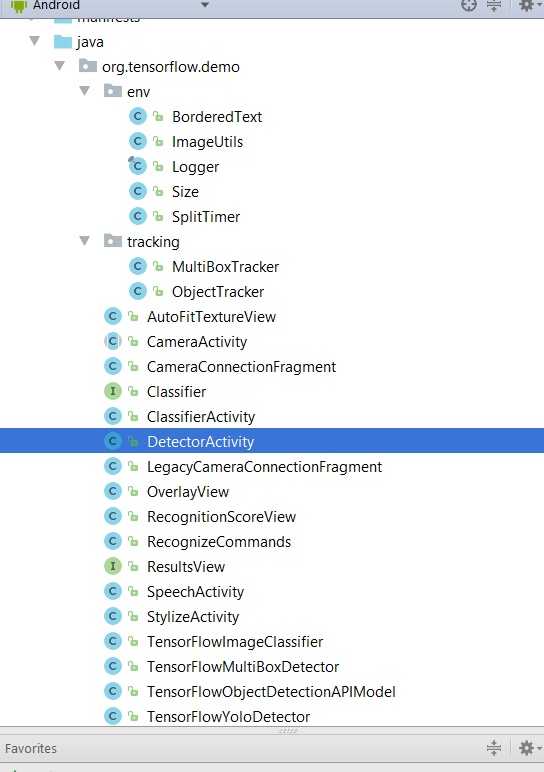

The Source Code directory contents

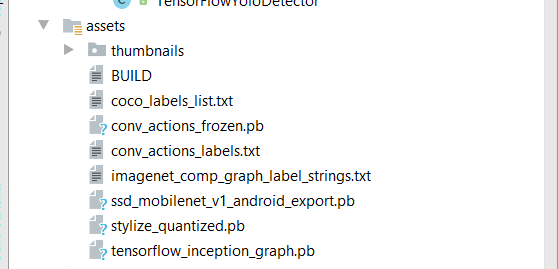

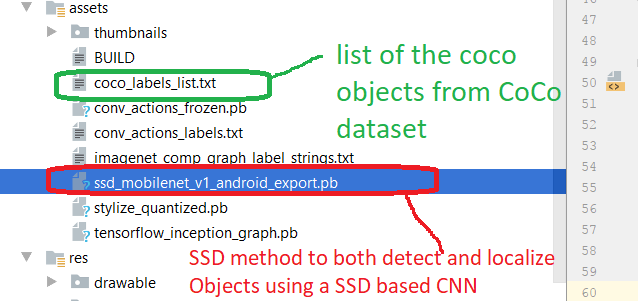

The assets directory contains the pre-trained tensorflow models

The Source directory--- note: we will discuss DetectorActivity specifically

The build.gradle file

It is relatively complex, and you can read it on your own. Note, however, that we are running with nativeBuildSystem = 'none', so the following code inside it is executed. It automatically downloads the latest stable version of TensorFlow as an AAR and installs it in your project.

    dependencies {
        if (nativeBuildSystem == 'cmake' || nativeBuildSystem == 'none') {
            compile 'org.tensorflow:tensorflow-android:+'
        }
    }

The ABSTRACT CameraActivity (both DetectorActivity and ClassifierActivity extend this class) and its Layout File activity_camera

res/layout/activity_camera.xml

java (org.tensorflow.demo) / CameraActivity.java

The DetectorActivity class implements the abstract CameraActivity class above, so it only needs to deal with the Classifier used and the implemented methods such as:

onPreviewSizeChosen() = sets up sizing and creates the Classifier instance for the type of classification, in this case identification and localization

processImage() = processes the current image frame using the Classifier instance that was set up
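The division of labor between the abstract base class and its subclass can be sketched without any Android classes. Everything here is hypothetical: CameraBaseSketch stands in for CameraActivity, DetectorSketch for DetectorActivity, and the method signatures are simplified (the real ones differ). The point is only that the base class drives the camera and calls the two hooks, while the subclass supplies the detector-specific work.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the abstract CameraActivity; no Android classes used.
abstract class CameraBaseSketch {
    // Hook called once, when the camera preview size is known.
    protected abstract void onPreviewSizeChosen(int width, int height);

    // Hook called for each new camera frame.
    protected abstract void processImage();

    // The base class owns the camera lifecycle and calls the hooks in order.
    final void simulateCamera(int width, int height, int frameCount) {
        onPreviewSizeChosen(width, height);
        for (int i = 0; i < frameCount; i++) {
            processImage();
        }
    }
}

// Hypothetical stand-in for DetectorActivity.
class DetectorSketch extends CameraBaseSketch {
    final List<String> log = new ArrayList<>();
    private String detector;  // stands in for the Classifier instance
    private int frames;

    @Override
    protected void onPreviewSizeChosen(int width, int height) {
        detector = "detector for " + width + "x" + height;
        log.add("created " + detector);
    }

    @Override
    protected void processImage() {
        frames++;
        log.add("frame " + frames + " processed by " + detector);
    }
}

class CameraHooksDemo {
    public static void main(String[] args) {
        DetectorSketch sketch = new DetectorSketch();
        sketch.simulateCamera(640, 480, 2);
        for (String line : sketch.log) {
            System.out.println(line);
        }
    }
}
```

A second subclass for classification would reuse simulateCamera unchanged and override only the two hooks.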

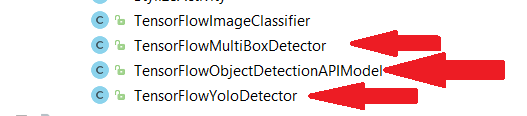

The default object detector in the Android Object Detection demo is now the 80-class Object Detection API SSD model; see also https://research.googleblog.com/2017/06/supercharge-your-computer-vision-models.html

The YOLO (You Only Look Once) and original Multibox detectors remain available by modifying DetectorActivity.java

java (org.tensorflow.demo) / DetectorActivity.java -- its purpose is to perform both identification and localization

Some Important classes: TensorFlowObjectDetectionAPIModel and Classifier

TensorFlowObjectDetectionAPIModel -- used to create an instance of the Object Detection API classifier

java (org.tensorflow.demo) / Classifier.java -- a "helper" class that represents a TensorFlow Classifier; it will load a pretrained model. The file opens with the header "Copyright 2015 The TensorFlow Authors. All Rights Reserved."
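To make the idea of a classifier abstraction concrete, here is a simplified sketch. It does not reproduce Classifier.java: ClassifierSketch, RecognitionSketch, and keepConfident are hypothetical names, the input is a float array rather than an Android Bitmap, and the labels and scores in fakeResults are made-up illustration data.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical, simplified result type: a label, a confidence score, and a
// bounding box given as {left, top, right, bottom}.
class RecognitionSketch {
    final String title;
    final float confidence;
    final float[] box;

    RecognitionSketch(String title, float confidence, float[] box) {
        this.title = title;
        this.confidence = confidence;
        this.box = box;
    }
}

// Hypothetical stand-in for the classifier abstraction.
interface ClassifierSketch {
    List<RecognitionSketch> recognizeImage(float[] pixels);
}

class ClassifierDemo {
    // Made-up results for illustration; a real classifier would run a model.
    static List<RecognitionSketch> fakeResults() {
        return Arrays.asList(
            new RecognitionSketch("chair", 0.30f, new float[] {0f, 0f, 10f, 10f}),
            new RecognitionSketch("dog", 0.70f, new float[] {5f, 5f, 40f, 30f}),
            new RecognitionSketch("person", 0.95f, new float[] {20f, 0f, 60f, 90f}));
    }

    // Keeps results at or above minConfidence, highest confidence first.
    static List<RecognitionSketch> keepConfident(
            List<RecognitionSketch> results, float minConfidence) {
        List<RecognitionSketch> kept = new ArrayList<>();
        for (RecognitionSketch r : results) {
            if (r.confidence >= minConfidence) {
                kept.add(r);
            }
        }
        kept.sort(Comparator.comparingDouble(
            (RecognitionSketch r) -> r.confidence).reversed());
        return kept;
    }

    public static void main(String[] args) {
        ClassifierSketch fake = pixels -> fakeResults();
        for (RecognitionSketch r : keepConfident(fake.recognizeImage(new float[0]), 0.5f)) {
            System.out.println(r.title + " " + r.confidence + " " + Arrays.toString(r.box));
        }
    }
}
```

Because callers depend only on the interface, the activity code can switch between detectors without changing how results are consumed.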
