Metrics: Accuracy, Precision, Recall, Recognition Rate, Top-1, Top-N

How do you measure the "goodness" of a system, that is, how successful it is? Several common metrics are described below.

Accuracy/Recognition Rate

= # correctly classified samples (over all classes) / total # of samples

Note: the recognition rate can also be reported for each class separately.
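As a minimal sketch of the definition above, the following computes the overall recognition rate and the per-class recognition rate from two label lists. The function name `recognition_rates` and the sample labels are made up for illustration and are not taken from any particular library.

```python
from collections import Counter

def recognition_rates(y_true, y_pred):
    """Return (overall recognition rate, {class: per-class recognition rate})."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = Counter()  # correctly classified samples, per true class
    total = Counter()    # number of samples, per true class
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    overall = sum(correct.values()) / len(y_true)
    per_class = {c: correct[c] / total[c] for c in total}
    return overall, per_class

# Hypothetical labels, for illustration only.
y_true = ["cat", "cat", "dog", "dog", "dog"]
y_pred = ["cat", "dog", "dog", "dog", "cat"]
overall, per_class = recognition_rates(y_true, y_pred)
print(overall)    # 3 of 5 samples are correct
print(per_class)  # cat: 1 of 2 correct, dog: 2 of 3 correct
```

The per-class rate here divides by the number of samples that truly belong to each class, so it shows which classes the system handles poorly even when the overall rate looks good.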

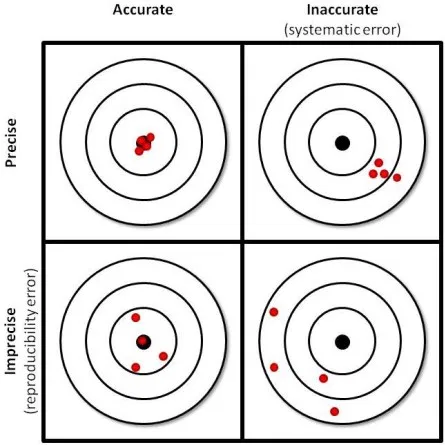

Recall and Precision

Accuracy = # correct classifications / total samples = (TP + TN) / (TP + FN + FP + TN)

Recall = the fraction of the actual members of the class that were found = TP / (TP + FN)

Precision = the fraction of the samples reported as the class that really belong to it = TP / (TP + FP)

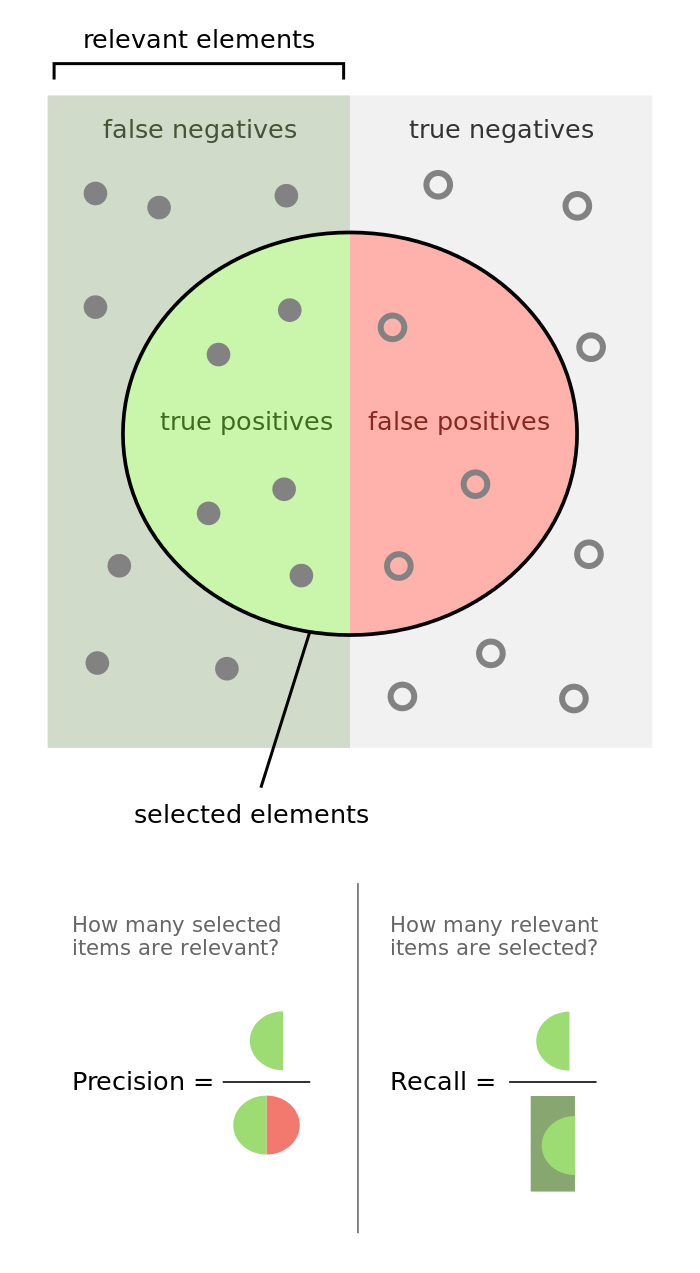

TP True Positive = correctly said it IS that class

FN False Negative = incorrectly said it is NOT that class

TN True Negative = correctly said it is NOT that class

FP False Positive = incorrectly said it IS that class
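The three metrics follow directly from the four counts defined above. Here is a minimal sketch, assuming the counts are already known; the function name `binary_metrics` and the example counts are hypothetical. Accuracy counts both true positives and true negatives as correct decisions.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision and recall for one class from its counts."""
    total = tp + fp + fn + tn
    # Guard against empty denominators (an assumption: report 0.0 in that case).
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=40, fp=10, fn=20, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Returning 0.0 for an empty denominator is only one possible convention; some tools raise an error or report the value as undefined instead.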

Here is an illustration, using an example from Wikipedia.

Image = 12 dogs + 6 cats

The system reports 8 dogs found

TP = correctly finds 5 dogs

FP = incorrectly says 3 are dogs, which are actually cats

For Dog Class:

Precision = 5/(5+3) = 5/8

Recall = 5/(5+7) = 5/12 (there are 12 dogs in total: 5 TP and 7 FN)
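The dog example can be checked by counting directly from label lists. This is a sketch under stated assumptions: the helper `confusion_counts` is a hypothetical name, and the order of the samples is arbitrary; only the counts (12 dogs, 6 cats, 8 reported dogs of which 5 are real) come from the example.

```python
from fractions import Fraction

# 12 true dogs followed by 6 true cats.
y_true = ["dog"] * 12 + ["cat"] * 6
# 5 dogs found, 7 dogs missed, 3 cats reported as dogs, 3 cats left as cats.
y_pred = ["dog"] * 5 + ["cat"] * 7 + ["dog"] * 3 + ["cat"] * 3

def confusion_counts(y_true, y_pred, positive):
    """Count TP, FP, FN, TN, treating `positive` as the class of interest."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

tp, fp, fn, tn = confusion_counts(y_true, y_pred, "dog")
precision = Fraction(tp, tp + fp)
recall = Fraction(tp, tp + fn)
print(tp, fp, fn, tn)     # 5 3 7 3
print(precision, recall)  # 5/8 5/12
```

Using `Fraction` keeps the results exact so they can be compared with the hand calculation.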