Cloud Vision API and Android

***** How to make REST requests to the Cloud Vision API, sending image(s) and getting responses *****

Resources:


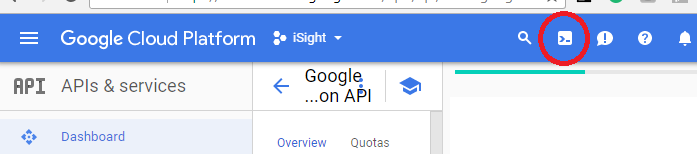

Navigate to the API Manager


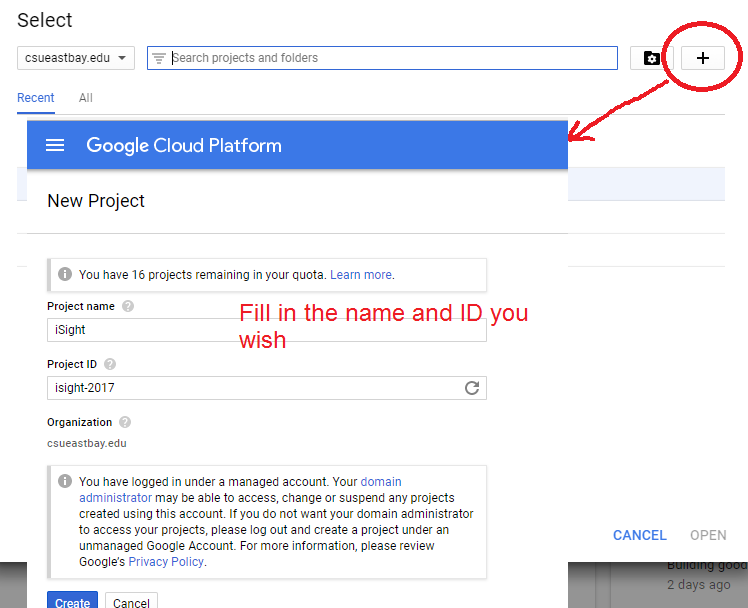

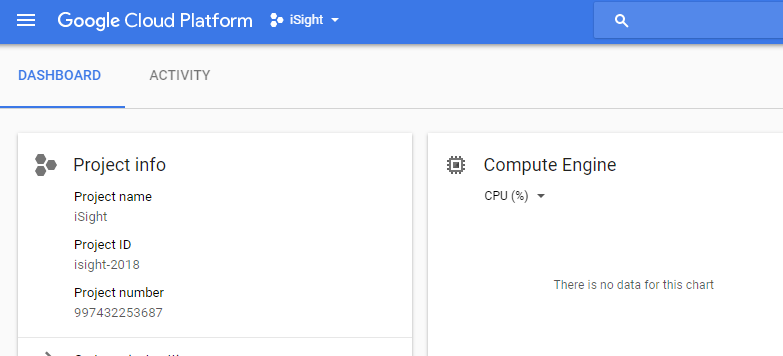

STEP 1: Create a project in Google Cloud (you must have an account) and enable the Vision API.


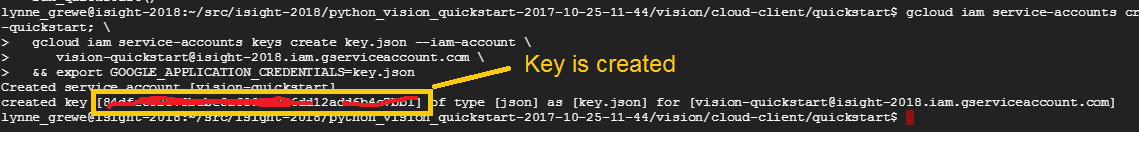

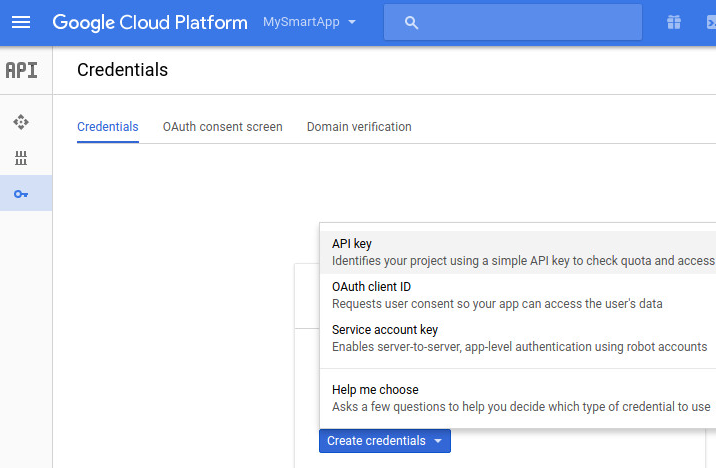

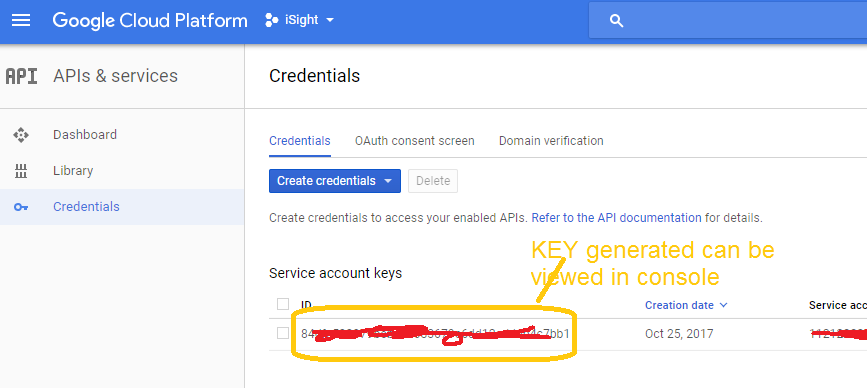

STEP 2: Create credentials for accessing the API


Create a service account and a service account key, and set the key as your default credentials. Once you are logged in to the Google Cloud Console, open the command-line console (Cloud Shell) by clicking its icon in the navigation bar, and type:

gcloud iam service-accounts create vision-quickstart; \

Here is an example of me running this: the service account is named vision-quickstart and my project ID is isight-2018.


Alternative: do the same thing through the main Cloud Console web interface.


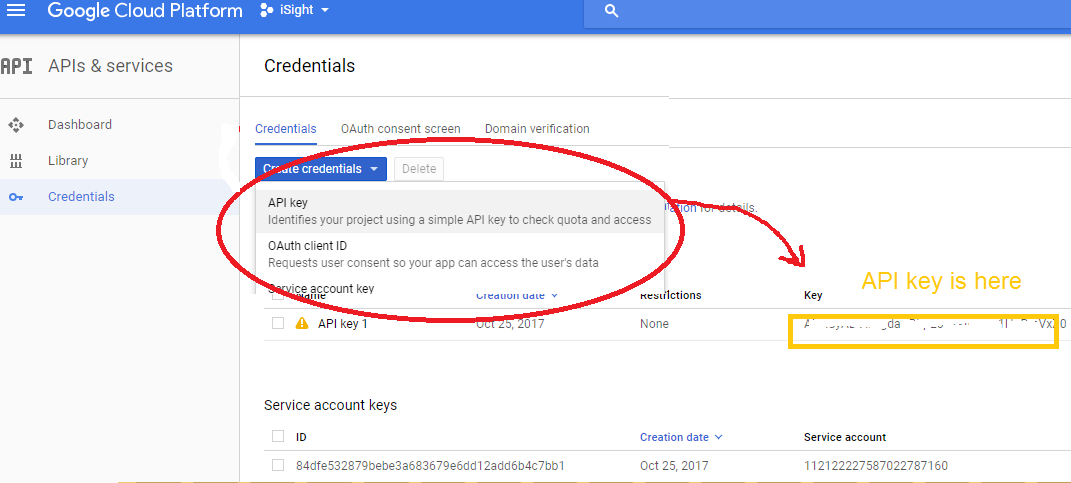

NOW YOU MUST ALSO GET the Cloud Vision API key (what you created above was a service account key).

STEP 3: Add dependencies to the app module's build.gradle file in your Android project:

compile 'com.google.api-client:google-api-client-android:1.22.0'
compile 'com.google.apis:google-api-services-vision:v1-rev357-1.22.0'
compile 'com.google.code.findbugs:jsr305:2.0.1'

ALSO ADD the Apache Commons IO library:

compile 'commons-io:commons-io:2.5'

ALSO ADD Gson support for the HTTP client:

compile 'com.google.http-client:google-http-client-gson:1.19.0'

(Gradle 7.0 and later no longer support the compile configuration; on newer projects use implementation instead.)

ALSO ADD the INTERNET permission to the AndroidManifest.xml file:

<uses-permission android:name="android.permission.INTERNET"/>

STEP 4: Add code that creates the Vision client, specifying the API key, the HTTP transport, and the JSON factory it should use. As you might expect, the HTTP transport is responsible for communicating with Google's servers, and the JSON factory is, among other things, responsible for converting the JSON results the API generates into Java objects.

Google recommends that you use the NetHttpTransport class as the HTTP transport and the AndroidJsonFactory class as the JSON factory.
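Under the hood, the client library sends an HTTPS POST with a JSON body to the Vision REST endpoint. The sketch below builds that request by hand using only the Java standard library, which can help when debugging what is actually sent. It assumes the public v1 `images:annotate` endpoint with the API key passed as the `key` query parameter; the class and method names (`VisionRestSketch`, `buildAnnotateJson`, `annotate`) are hypothetical. `java.util.Base64` needs Android API level 26 or higher, and `annotate()` blocks, so it must run off the UI thread.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper: a hand-built REST call to Cloud Vision, without the client library.
class VisionRestSketch {
    // Assumed endpoint: Cloud Vision v1 images:annotate; the API key goes in the "key" query parameter.
    static final String ENDPOINT = "https://vision.googleapis.com/v1/images:annotate";

    /** Builds the JSON body for one image with a single LABEL_DETECTION feature. */
    static String buildAnnotateJson(byte[] jpegBytes, int maxResults) {
        // The API expects the raw image bytes base64-encoded in image.content
        String content = Base64.getEncoder().encodeToString(jpegBytes);
        return "{\"requests\":[{"
                + "\"image\":{\"content\":\"" + content + "\"},"
                + "\"features\":[{\"type\":\"LABEL_DETECTION\",\"maxResults\":" + maxResults + "}]"
                + "}]}";
    }

    /** POSTs the request and returns the raw JSON response (or error body). Blocking call. */
    static String annotate(String apiKey, byte[] jpegBytes) throws IOException {
        HttpURLConnection conn =
                (HttpURLConnection) URI.create(ENDPOINT + "?key=" + apiKey).toURL().openConnection();
        try {
            conn.setRequestMethod("POST");
            conn.setRequestProperty("Content-Type", "application/json; charset=utf-8");
            conn.setDoOutput(true);
            try (OutputStream out = conn.getOutputStream()) {
                out.write(buildAnnotateJson(jpegBytes, 10).getBytes(StandardCharsets.UTF_8));
            }
            // HTTP errors (e.g. 400 or 403) arrive on the error stream, not the input stream
            InputStream in = conn.getResponseCode() >= 400 ? conn.getErrorStream() : conn.getInputStream();
            if (in == null) {
                return "";
            }
            try (InputStream body = in) {
                ByteArrayOutputStream buffer = new ByteArrayOutputStream();
                byte[] chunk = new byte[8192];
                int n;
                while ((n = body.read(chunk)) != -1) {
                    buffer.write(chunk, 0, n);
                }
                return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
            }
        } finally {
            conn.disconnect();
        }
    }
}
```

The response should be the same JSON that the client library parses into a `BatchAnnotateImagesResponse`, so comparing the two is a quick way to tell whether a failure comes from your request or from your parsing.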

STEP A) Add the API key you got above as a string in the strings.xml file in your res/values directory:

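<string name="CLOUD_VISION_API_KEY">YOUR_CLOUD_VISION_API_KEY</string>

The request code in STEP E also reads two header names from strings.xml, so add them as well:

<string name="ANDROID_PACKAGE_HEADER">X-Android-Package</string>
<string name="ANDROID_CERT_HEADER">X-Android-Cert</string>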

STEP B) Add the imports:

import android.graphics.Bitmap;
import android.os.AsyncTask;
import android.util.Log;

import com.google.api.client.extensions.android.http.AndroidHttp;
import com.google.api.client.extensions.android.json.AndroidJsonFactory;
import com.google.api.client.googleapis.json.GoogleJsonResponseException;
import com.google.api.client.http.HttpTransport;
import com.google.api.client.http.javanet.NetHttpTransport;
import com.google.api.client.json.JsonFactory;
import com.google.api.client.json.gson.GsonFactory;
import com.google.api.services.vision.v1.Vision;
import com.google.api.services.vision.v1.VisionRequest;
import com.google.api.services.vision.v1.VisionRequestInitializer;
import com.google.api.services.vision.v1.model.AnnotateImageRequest;
import com.google.api.services.vision.v1.model.BatchAnnotateImagesRequest;
import com.google.api.services.vision.v1.model.BatchAnnotateImagesResponse;
import com.google.api.services.vision.v1.model.EntityAnnotation;
import com.google.api.services.vision.v1.model.Feature;
import com.google.api.services.vision.v1.model.Image;

import org.opencv.android.Utils;
import org.opencv.core.Mat;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

STEP C) In your main Activity (or wherever you want to make requests to the Cloud Vision API), add the following setup code.

The Vision class represents the Google API client for Cloud Vision. Although it is possible to create an instance of the class using its constructor, using the Vision.Builder class instead is easier and more flexible. When using Vision.Builder, you must remember to call the setVisionRequestInitializer() method to specify your API key. The following code shows you how:

// add these class variables to your Activity (or whatever class)
private static final String TAG = "MainActivity"; // tag used by the Log calls below
// declare the Google Cloud Vision object
Vision vision;
int count = 0;
// Google Cloud Vision takes a few seconds to respond,
// so I only send a request out every 300 frames
int COUNT_GOOGLE_CLOUD_TRIGGER = 300;

// add this to your Activity's onCreate method
// set up the Vision object
Vision.Builder visionBuilder = new Vision.Builder(
        new NetHttpTransport(), new AndroidJsonFactory(), null);
// pass the key string itself, not the resource ID R.string.CLOUD_VISION_API_KEY
visionBuilder.setVisionRequestInitializer(
        new VisionRequestInitializer(getString(R.string.CLOUD_VISION_API_KEY)));
vision = visionBuilder.build();

STEP D) Add code to encode your image

Add code to convert the OpenCV Mat image into an Android Bitmap and then call the function callCloudVision(bitmap, imageMat).

Note: optionally you can scale down the image, because the Google Vision API is not fast (it can take 2-4 seconds to respond on the devices I have run it on over my home network).

// convert imageMat to a bitmap
Bitmap bitmap = Bitmap.createBitmap(imageMat.width(), imageMat.height(), Bitmap.Config.ARGB_8888);
Utils.matToBitmap(imageMat, bitmap);

// OPTIONAL: scale it down to 640x480 (longer side 640) so it is smaller; this is the minimum for landmark detection.
// See the minimum sizes required for the various Google Vision API features: https://cloud.google.com/vision/docs/supported-files
Bitmap smallerBitmap = this.scaleBitmapDown(bitmap, 640);

// call the method that passes the (scaled-down) bitmap to Cloud Vision
try {
    callCloudVision(smallerBitmap, imageMat);
} catch (IOException i) {
    Log.e(TAG, "Cloud Vision call failed: " + i.getMessage());
}

STEP E) Add the methods callCloudVision, convertResponseToString, and scaleBitmapDown:

/**
 * This method builds a Vision client and makes a label-detection request to the Google Cloud Vision API.
 * @param bitmap input Bitmap image
 * @param imageMat output Mat to be displayed via OpenCV
 */
public void callCloudVision(final Bitmap bitmap, final Mat imageMat) throws IOException {

    // REMOVE THE FOLLOWING if calling this method for pictures taken manually by the user, or for pictures
    // taken a few seconds apart. Otherwise you need something like this so you do not process every video
    // frame: only trigger processing through the Google Cloud Vision API every COUNT_GOOGLE_CLOUD_TRIGGER
    // frames, because the service takes a few seconds to respond.
    if (count++ % COUNT_GOOGLE_CLOUD_TRIGGER != 0)
        return; // skip this frame (count is incremented on every call)

    Log.i("TEST", "before cloud api call");

    // Do the real work in an AsyncTask, because we need to use the network anyway
    new AsyncTask<Object, Void, String>() {
        @Override
        protected String doInBackground(Object... params) {
            try {
                HttpTransport httpTransport = AndroidHttp.newCompatibleTransport();
                JsonFactory jsonFactory = GsonFactory.getDefaultInstance();

                VisionRequestInitializer requestInitializer =
                        new VisionRequestInitializer(getString(R.string.CLOUD_VISION_API_KEY)) {
                    /**
                     * We override this so we can inject important identifying fields into the HTTP
                     * headers. This enables use of a restricted cloud platform API key.
                     */
                    @Override
                    protected void initializeVisionRequest(VisionRequest<?> visionRequest)
                            throws IOException {
                        super.initializeVisionRequest(visionRequest);

                        String packageName = getPackageName();
                        visionRequest.getRequestHeaders().set(getString(R.string.ANDROID_PACKAGE_HEADER), packageName);

                        String sig = PackageManagerUtils.getSignature(getPackageManager(), packageName);
                        visionRequest.getRequestHeaders().set(getString(R.string.ANDROID_CERT_HEADER), sig);
                    }
                };

                Vision.Builder builder = new Vision.Builder(httpTransport, jsonFactory, null);
                builder.setVisionRequestInitializer(requestInitializer);
                Vision vision = builder.build();

                BatchAnnotateImagesRequest batchAnnotateImagesRequest = new BatchAnnotateImagesRequest();
                batchAnnotateImagesRequest.setRequests(new ArrayList<AnnotateImageRequest>() {{
                    AnnotateImageRequest annotateImageRequest = new AnnotateImageRequest();

                    // Add the image
                    Image base64EncodedImage = new Image();
                    // Convert the bitmap to a JPEG,
                    // just in case it's a format that Android understands but Cloud Vision doesn't
                    ByteArrayOutputStream byteArrayOutputStream = new ByteArrayOutputStream();
                    bitmap.compress(Bitmap.CompressFormat.JPEG, 90, byteArrayOutputStream);
                    byte[] imageBytes = byteArrayOutputStream.toByteArray();

                    // Base64 encode the JPEG
                    base64EncodedImage.encodeContent(imageBytes);
                    annotateImageRequest.setImage(base64EncodedImage);

                    // add the features we want
                    annotateImageRequest.setFeatures(new ArrayList<Feature>() {{
                        Feature labelDetection = new Feature();
                        labelDetection.setType("LABEL_DETECTION");
                        labelDetection.setMaxResults(10);
                        add(labelDetection);
                    }});

                    // Add the list of one thing to the request
                    add(annotateImageRequest);
                }});

                Vision.Images.Annotate annotateRequest =
                        vision.images().annotate(batchAnnotateImagesRequest);
                // Due to a bug: requests to Vision API containing large images fail when GZipped.
                annotateRequest.setDisableGZipContent(true);
                Log.d(TAG, "created Cloud Vision request object, sending request");

                BatchAnnotateImagesResponse response = annotateRequest.execute();
                return convertResponseToString(response);

            } catch (GoogleJsonResponseException e) {
                Log.d(TAG, "failed to make API request because " + e.getContent());
            } catch (IOException e) {
                Log.d(TAG, "failed to make API request because of other IOException " + e.getMessage());
            }
            return "Cloud Vision API request failed. Check logs for details.";
        }

        @Override
        protected void onPostExecute(String result) {
            // mImageDetails: a TextView field in your Activity that displays the result (define it yourself)
            mImageDetails.setText(result);
        }
    }.execute();
}
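AsyncTask was deprecated in Android API level 30. A minimal sketch of the same doInBackground/onPostExecute split using `java.util.concurrent` is below; `CompletableFuture` needs API level 24 or higher, and `BackgroundCall`/`run` are hypothetical names. The callback is not guaranteed to run on the UI thread, so on Android it would still have to hop there (for example with `runOnUiThread`) before touching `mImageDetails`.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

// Hypothetical replacement for the AsyncTask in callCloudVision.
class BackgroundCall {
    // One daemon background thread: requests are throttled, so they rarely overlap,
    // and a daemon thread does not keep the JVM alive on its own.
    private static final ExecutorService EXECUTOR = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "cloud-vision");
        t.setDaemon(true);
        return t;
    });

    /**
     * Runs work on the background thread (like doInBackground) and passes its result,
     * or a failure message, to onResult (like onPostExecute, but not necessarily on the UI thread).
     */
    static CompletableFuture<Void> run(Callable<String> work, Consumer<String> onResult) {
        return CompletableFuture.supplyAsync(() -> {
            try {
                return work.call();
            } catch (Exception e) {
                return "Cloud Vision API request failed: " + e.getMessage();
            }
        }, EXECUTOR).thenAccept(onResult);
    }
}
```

In an Activity, a call might look like `BackgroundCall.run(() -> ..., result -> runOnUiThread(() -> mImageDetails.setText(result)))`, where the first lambda contains the request code from doInBackground.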

/**
 * Method to create a String from the response of a Google Vision label detection on an image.
 * @param response the BatchAnnotateImagesResponse returned by the Cloud Vision API
 * @return one "score: description" line per label, or "nothing" if no labels were returned
 */
private String convertResponseToString(BatchAnnotateImagesResponse response) {
    String message = "I found these things:\n\n";
    List<EntityAnnotation> labels = response.getResponses().get(0).getLabelAnnotations();
    if (labels != null) {
        for (EntityAnnotation label : labels) {
            message += String.format(Locale.US, "%.3f: %s", label.getScore(), label.getDescription());
            message += "\n";
        }
    } else {
        message += "nothing";
    }
    return message;
}
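convertResponseToString builds its message with repeated `String +=`. The sketch below does the same formatting with a `StringBuilder`; `Label` is a hypothetical stand-in for `EntityAnnotation` (keeping only the description and score the tutorial reads) so the logic can be tried without the client library. Unlike the original, it also prints "nothing" when the label list is empty, not only when it is null.

```java
import java.util.List;
import java.util.Locale;

// Hypothetical sketch of convertResponseToString's formatting, without the client library.
class LabelFormatter {
    // Hypothetical stand-in for EntityAnnotation: only the two fields the tutorial reads.
    static final class Label {
        final String description;
        final float score;

        Label(String description, float score) {
            this.description = description;
            this.score = score;
        }
    }

    static String format(List<Label> labels) {
        StringBuilder message = new StringBuilder("I found these things:\n\n");
        if (labels == null || labels.isEmpty()) {
            return message.append("nothing").toString();
        }
        for (Label label : labels) {
            // Locale.US keeps "." as the decimal separator regardless of the device locale
            message.append(String.format(Locale.US, "%.3f: %s\n", label.score, label.description));
        }
        return message.toString();
    }
}
```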

/**
 * Method to resize the original bitmap to produce a bitmap whose larger side (width or height)
 * equals maxDimension, while keeping the aspect ratio the same as the original.
 * @param bitmap input original bitmap
 * @param maxDimension maximum of either width or height of the new rescaled image, keeping the original aspect ratio
 * @return rescaled image with the same aspect ratio
 */
public Bitmap scaleBitmapDown(Bitmap bitmap, int maxDimension) {
    int originalWidth = bitmap.getWidth();
    int originalHeight = bitmap.getHeight();
    int resizedWidth = maxDimension;
    int resizedHeight = maxDimension;

    if (originalHeight > originalWidth) {
        resizedHeight = maxDimension;
        resizedWidth = (int) (resizedHeight * (float) originalWidth / (float) originalHeight);
    } else if (originalWidth > originalHeight) {
        resizedWidth = maxDimension;
        resizedHeight = (int) (resizedWidth * (float) originalHeight / (float) originalWidth);
    } else if (originalHeight == originalWidth) {
        resizedHeight = maxDimension;
        resizedWidth = maxDimension;
    }
    return Bitmap.createScaledBitmap(bitmap, resizedWidth, resizedHeight, false);
}
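The size arithmetic in scaleBitmapDown can be checked on its own, without any Bitmap. The sketch below reproduces the same float math and `(int)` truncation; `ScaleMath.scaledSize` is a hypothetical name, and it returns `{width, height}`.

```java
// Hypothetical helper reproducing scaleBitmapDown's size calculation.
class ScaleMath {
    /** Returns {width, height} with the longer side equal to maxDimension and the aspect ratio kept. */
    static int[] scaledSize(int originalWidth, int originalHeight, int maxDimension) {
        if (originalHeight > originalWidth) {
            // portrait: height becomes maxDimension, width shrinks proportionally (truncated)
            int w = (int) (maxDimension * (float) originalWidth / (float) originalHeight);
            return new int[] {w, maxDimension};
        } else if (originalWidth > originalHeight) {
            // landscape: width becomes maxDimension, height shrinks proportionally (truncated)
            int h = (int) (maxDimension * (float) originalHeight / (float) originalWidth);
            return new int[] {maxDimension, h};
        }
        return new int[] {maxDimension, maxDimension}; // square image
    }
}
```

For example, a 1280x960 frame becomes 640x480 and a 1920x1080 frame becomes 640x360. Note that, like scaleBitmapDown, this also enlarges images that are already smaller than maxDimension; if that is not wanted, you could return the original size when both sides are already at most maxDimension.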

STEP 5: Add this class, PackageManagerUtils.java, to your project:

import android.content.pm.PackageInfo;
import android.content.pm.PackageManager;
import android.content.pm.Signature;
import android.support.annotation.NonNull; // in AndroidX projects use androidx.annotation.NonNull

import com.google.common.io.BaseEncoding;

import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/**
 * Created by Lynne on 10/25/2017.
 */

/**
 * Provides utility logic for getting the app's SHA1 signature. Used with restricted API keys.
 */
public class PackageManagerUtils {

    /**
     * Gets the SHA1 signature, hex encoded for inclusion with Google Cloud Platform API requests
     *
     * @param pm the PackageManager used to look up the package
     * @param packageName Identifies the APK whose signature should be extracted.
     * @return a lowercase, hex-encoded SHA1 signature, or null if it cannot be read
     */
    public static String getSignature(@NonNull PackageManager pm, @NonNull String packageName) {
        try {
            // GET_SIGNATURES is deprecated as of API level 28 but still works
            PackageInfo packageInfo = pm.getPackageInfo(packageName, PackageManager.GET_SIGNATURES);
            if (packageInfo == null
                    || packageInfo.signatures == null
                    || packageInfo.signatures.length == 0
                    || packageInfo.signatures[0] == null) {
                return null;
            }
            return signatureDigest(packageInfo.signatures[0]);
        } catch (PackageManager.NameNotFoundException e) {
            return null;
        }
    }

    private static String signatureDigest(Signature sig) {
        byte[] signature = sig.toByteArray();
        try {
            MessageDigest md = MessageDigest.getInstance("SHA1");
            byte[] digest = md.digest(signature);
            return BaseEncoding.base16().lowerCase().encode(digest);
        } catch (NoSuchAlgorithmException e) {
            return null;
        }
    }
}
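If you would rather not depend on Guava's `BaseEncoding`, the digest-and-hex step in signatureDigest can be done with the standard library alone. This is a sketch; `Sha1Hex` is a hypothetical name, and on Android you would pass it `sig.toByteArray()` exactly as signatureDigest does.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical Guava-free version of the SHA-1 + lowercase hex step in signatureDigest.
class Sha1Hex {
    static String sha1Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-1").digest(data);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                // mask to 0..255 so negative bytes print as two lowercase hex digits
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            return null; // same fallback as signatureDigest
        }
    }
}
```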

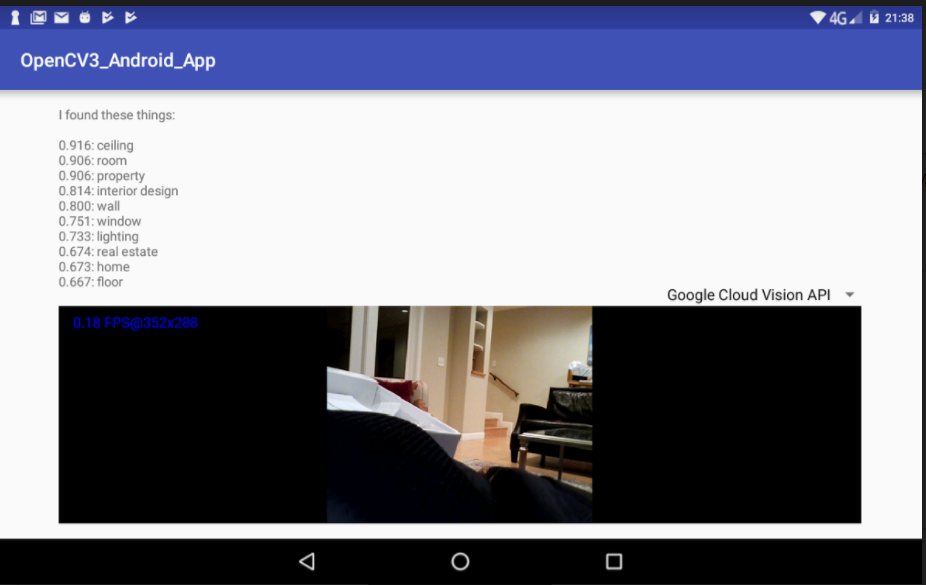

STEP 6: Run the code. NOTE: you cannot process real-time video this way. It takes seconds for the Google Vision API to respond (on my devices on my home network), so you have to process only a frame every so often; see the code above, where I send only one out of every 300 frames.
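The every-Nth-frame idea can be isolated in a small class so it is easy to test and reuse. In this sketch `FrameThrottle` is a hypothetical name; the counter wraps around instead of growing forever, so it cannot overflow on a long-running camera session.

```java
// Hypothetical helper: decides which camera frames are sent to Cloud Vision.
class FrameThrottle {
    private final int every; // send one frame out of every `every` frames (e.g. 300)
    private int count = 0;   // position within the current cycle, 0..every-1

    FrameThrottle(int every) {
        if (every <= 0) throw new IllegalArgumentException("every must be positive");
        this.every = every;
    }

    /** Returns true for frames 0, every, 2*every, ... and false for all others. */
    boolean shouldSend() {
        boolean send = (count == 0);
        count = (count + 1) % every; // wrap around so the counter never overflows
        return send;
    }
}
```

In your per-frame callback you would call `shouldSend()` first and only build the bitmap and call callCloudVision when it returns true. A frame count ties the request rate to the camera's frame rate; a time-based gate (for example, at most one request every few seconds measured with `SystemClock.elapsedRealtime()`) is an alternative if the frame rate varies between devices.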