Output of a Classifier: Logits vs. Softmax, and Converting Logits to Percentages

Logits Output of a Classifier

In machine learning, particularly in classification tasks, the output of a classifier is often referred to as "logits." Logits are the raw, unbounded scores produced by the final layer of a neural network before any activation function is applied.

Example: Classifying Five Types of Flowers

Consider a flower classification problem where we have five types of flowers: Rose, Tulip, Daisy, Sunflower, and Lily. When a neural network processes an input image of a flower, it outputs a vector of logits, which represents the model's unnormalized confidence scores for each flower type.

Possible logits vector (in the order Rose, Tulip, Daisy, Sunflower, Lily): Logits = [1.5, −0.5, 2.0, 0.3, −1.2]

Interpretation:

- Positive Logits: A positive logit indicates that the model leans toward that class; the larger the logit, the stronger the preference. For example, the logit for Daisy is 2.0, the highest in the vector, so the model ranks Daisy as the most likely class.
- Negative Logits: A negative logit indicates that the model considers the corresponding class less likely. For example, the logit for Tulip is −0.5, which suggests the model is not confident that the flower is a Tulip. What matters is each logit relative to the others: adding the same constant to every logit leaves the resulting probabilities unchanged.

The logits serve as the input for the next step in the classification process, providing a way to quantify the model's confidence in each class.
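A minimal sketch in plain Python that pairs each logit with its flower class and picks the predicted class. The names `CLASSES`, `LOGITS`, and `rank_classes` are illustrative, not from any library. Because softmax preserves the ordering of the logits, the class with the largest logit is also the class that will receive the highest probability.

```python
# Class names in the same order as the example logits vector.
CLASSES = ["Rose", "Tulip", "Daisy", "Sunflower", "Lily"]

# Example logits: raw, unnormalized scores from the final layer.
LOGITS = [1.5, -0.5, 2.0, 0.3, -1.2]


def rank_classes(classes, logits):
    """Return (class, logit) pairs sorted from highest to lowest logit."""
    return sorted(zip(classes, logits), key=lambda pair: pair[1], reverse=True)


ranking = rank_classes(CLASSES, LOGITS)

# The predicted class is simply the one with the largest logit.
predicted = ranking[0][0]

for name, score in ranking:
    print(f"{name:>9}: {score:+.1f}")
print("Predicted class:", predicted)
```

No normalization is needed just to pick the top class; softmax only matters when you want interpretable confidence values.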

Converting Logits to Percentages using Softmax

To convert the logits into a more interpretable format, such as probabilities or percentages, the softmax function is used. Softmax transforms the logits into a probability distribution across the classes.

Applying the Softmax Function

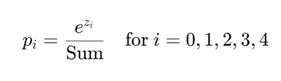

Given a vector of logits z=[z0,z1,z2,z3,z4] for five classes, the softmax function outputs probabilities where the pi sum to 1.0 using the following equations:

-

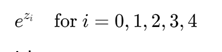

Exponentiate the logits:

-

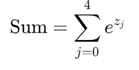

Calculate the sum of the exponentials:

-

Calculate softmax values:

The resulting probabilities (softmax values) sum to 1 and can then be converted to percentages by multiplying each probability by 100.
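The three steps above can be sketched in plain Python as follows. The helper names `softmax` and `to_percentages` are hypothetical. One assumption beyond the text: the sketch subtracts the largest logit before exponentiating, a common numerical-stability trick that avoids overflow for large logits and does not change the result.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1.

    Subtracting the maximum logit first keeps math.exp from overflowing;
    softmax is unchanged when the same constant is subtracted from every logit.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # step 1: exponentiate
    total = sum(exps)                          # step 2: sum the exponentials
    return [e / total for e in exps]           # step 3: normalize


def to_percentages(probs):
    """Multiply each probability by 100 to express it as a percentage."""
    return [100.0 * p for p in probs]


# Example logits in the order Rose, Tulip, Daisy, Sunflower, Lily.
logits = [1.5, -0.5, 2.0, 0.3, -1.2]
probs = softmax(logits)
percentages = to_percentages(probs)
print([f"{pct:.2f}%" for pct in percentages])
# ['31.72%', '4.29%', '52.30%', '9.55%', '2.13%']
```

Daisy, which had the largest logit, ends up with just over half of the probability mass.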

Why Do Softmax Values Represent Probabilities?

What we want: the probabilities of the classes should sum to 1.0 (100% as percentages), and their relative values should reflect the relative likelihood of each class occurring.

The softmax function is specifically designed to transform logits into probabilities for several reasons:

- Positive Transformation: The softmax function exponentiates the logits. Exponentiation ($e^{x}$) maps every logit to a positive value, whether the original logit was negative or positive. This is crucial because probabilities must be non-negative.
- Relative Comparison: Exponentiation emphasizes the differences between logits. The ratio of two softmax outputs is $p_i / p_j = e^{z_i - z_j}$, so a difference of $d$ between two logits becomes a factor of $e^{d}$ between their probabilities. This lets the model express its confidence more decisively: if one logit is significantly higher than the others, its probability will dominate the resulting distribution.
- Normalization: After exponentiating the logits, the softmax function divides each exponentiated logit by the sum of all exponentiated logits. This normalization step ensures that the probabilities sum to 1.

So the softmax values are positive, sum to 1.0, and reflect the likelihood of each class relative to the others: they form a valid probability distribution over the classes.
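The three properties can be checked numerically with a short plain-Python sketch. The `softmax` helper here is a hypothetical illustration that subtracts the maximum logit for numerical stability (an assumption not in the text; it does not change the output).

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max logit, exponentiate, normalize."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Example logits in the order Rose, Tulip, Daisy, Sunflower, Lily.
logits = [1.5, -0.5, 2.0, 0.3, -1.2]
p = softmax(logits)

# 1. Positive transformation: every output is strictly positive,
#    even for the negative logits (Tulip and Lily).
all_positive = all(pi > 0 for pi in p)

# 2. Normalization: the outputs sum to 1 (up to floating-point error).
sums_to_one = math.isclose(sum(p), 1.0)

# 3. Relative comparison: the ratio of two probabilities equals e raised
#    to the difference of their logits, e.g. Daisy (2.0) vs Rose (1.5).
ratio = p[2] / p[0]
expected_ratio = math.exp(logits[2] - logits[0])

print(all_positive, sums_to_one, math.isclose(ratio, expected_ratio))
# True True True
```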

Example

Using the previously mentioned logits vector:

Logits = [1.5, −0.5, 2.0, 0.3, −1.2]

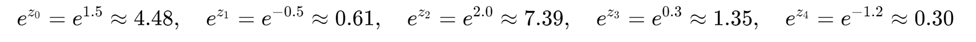

Exponentiate the logits:

Sum of exponentials:


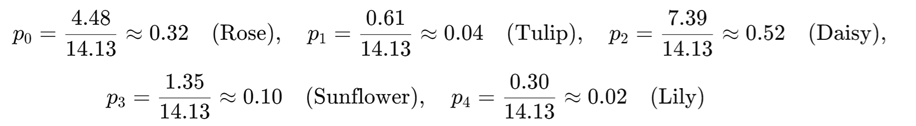

Calculate probabilities:

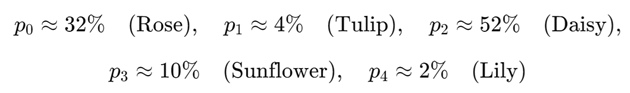

Convert to percentages:
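For reference, the arithmetic for this example works out as follows, with classes in the order Rose, Tulip, Daisy, Sunflower, Lily. Intermediate values are rounded to four decimal places and percentages to two, so the rounded figures add up to 1 (or 100%) only up to rounding.

```latex
\begin{aligned}
e^{z} &= [e^{1.5},\ e^{-0.5},\ e^{2.0},\ e^{0.3},\ e^{-1.2}]
       \approx [4.4817,\ 0.6065,\ 7.3891,\ 1.3499,\ 0.3012] \\
S &= \sum_{j=0}^{4} e^{z_j} \approx 14.1283 \\
p &= \frac{e^{z}}{S} \approx [0.3172,\ 0.0429,\ 0.5230,\ 0.0955,\ 0.0213] \\
100 \cdot p &\approx [31.72\%,\ 4.29\%,\ 52.30\%,\ 9.55\%,\ 2.13\%]
\end{aligned}
```

Daisy, with the largest logit (2.0), receives the largest share, about 52.30%.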