Generic Poster Tracking

Keywords: vision, tracking, poster, randomized trees, augmented reality

Author(s): Folker Wientapper, Harald Wuest

Date: 2007-06-13

Summary: This tutorial shows you how to use instant reality's vision module for tracking textured planar regions with the "Generic Poster Tracker".

Introduction

The "Generic Poster Tracker" is a tool that allows the user to create a markerless tracking application for augmented reality very easily. The underlying tracking method assumes that the object to be tracked is a well-textured, planar surface. Two tracking methods are combined: a keypoint classifier based on randomized trees for pose initialization, and a KLT tracker.

Warning: In the unlicensed system, the Postertracker runs without restrictions for only 3000 frames. After that (about 3 to 5 minutes), the frame rate is limited to 3 frames per second.

In order to create an AR application based on this tracking technology, the user has to carry out the following three steps, which will be explained in more detail subsequently:

- acquire a reference image of the object,

- perform an offline training phase and

- embed the tracker into the AR application.

Finally, some advanced features and tricks are explained, which skilled users might find useful.

Creating a reference image

As a first step, a reference image of the object must be taken. It is recommended to produce the reference image with the same camera that is later used for tracking. One possibility is to use the image capturing software that is (in most cases) provided by the camera manufacturer. Alternatively, the image can be captured with instant reality's vision module 'testpm4.exe'. For this purpose, the user needs to load a 'VideoSourceAction' for image acquisition, an 'ImageWriterAction' for storing the image on hard disk and, finally, a window ('MegaWidgetActionPipe') for inspecting the live video stream in order to choose an appropriate image. The procedure for the latter option is explained below.

After starting the vision module, load the following '.pm' file, which already contains the above-mentioned components:

Code: An image acquisition '.pm' file

<?xml version="1.0" encoding="UTF-8" standalone="no" ?>

<VisionLib2 Version="2.0">

<ActionPipe category="Action" name="main">

<VideoSourceAction__ImageT__RGB_Frame category="Action" enabled="1" name="VideoSourceAction">

<Keys size="2">

<key val="VideoSourceImage" what="image live, Image*, out"/>

<key val="" what="intrinsic parameters to be modified, out"/>

</Keys>

<ActionConfig source_url="ds://mode=1280x960xBI_RGB;unit=0;"/>

</VideoSourceAction__ImageT__RGB_Frame>

<ImageWriterAction__ImageT__RGB_Frame category="Action" enabled="0" name="Action">

<Keys size="2">

<key val="VideoSourceImage" what="image to write, image, in"/>

<key val="" what="Frame number (for sequences) as DataTemplate&lt;Int32&gt;, out"/>

</Keys>

<ActionConfig Filename_postfix_ala_images_type="jpg" Filename_prefix_of_the_images="C:\TestPosterTracker\refImage" Image_Sequence_boolean="0" Number_of_digits_to_write="4"/>

</ImageWriterAction__ImageT__RGB_Frame>

<MegaWidgetActionPipe height="245" name="WidgetActionPipe" show="1" viewerid="0" width="328" x="0" y="0">

<Keys size="8">

<key val="mwap" what="internal use, do not set or change!"/>

<key val="VideoSourceImage" what="background, Image*, in"/>

<key val="" what="2D geometry, GeometryContainer, in"/>

<key val="" what="3D geometry, GeometryContainer, in"/>

<key val="" what="Camera extrinsic parameters, ExtrinsicData, in"/>

<key val="" what="Camera intrinsic parameters, IntrinsicDataPerspective, in"/>

<key val="" what="OSG 3D Models, OSGNodeData, in"/>

<key val="" what="caption string, StringData&lt;string&gt;, in"/>

</Keys>

</MegaWidgetActionPipe>

</ActionPipe>

<DataSet key="">

</DataSet>

<Activity name="NOP"/>

</VisionLib2>

Before taking the image, create a folder where the image should be stored (e.g.: "C:\TestPosterTracker\").

Now carry out the following steps:

- Double-click on VideoSourceAction to set the camera parameters, especially the resolution (640x480 is sufficient)

- Press 'init'

- Press 'run' and watch the video while adjusting the camera position

- Press 'stop' when an acceptable camera position has been found

- Disable the VideoSourceAction to avoid acquiring new images

- Double-click on ImageWriterAction to set the desired path and filename of the image (e.g.: Filename_prefix_of_the_images="C:\TestPosterTracker\refImage")

- Enable the ImageWriterAction

- Press 'run once' to save the image at the desired destination

Regardless of which software is used to create the image, the user should take care to create the reference image properly, i.e.:

- The reference image should be taken with the viewing direction perpendicular to the surface of the object. This is the case when no projective distortions are observable, i.e. parallel lines on the object remain parallel in the captured image.

- Furthermore, it is advantageous not to capture any background of the object (or regions that do not belong to the planar part of the object).

Training phase

The whole generation process can be performed with an Activity plugin in the InstantVision application. The Activity is called GenericPosterTracker and can be started by clicking the corresponding Activity button.

Simple Mode

For the simple mode, first click 'add Poster'. A new window opens, in which you click the 'image not loaded' button and pick a picture. You can then decide whether to change the data path. By default, the data path is the directory of the picture you picked as the poster. Afterwards, click 'close' and the poster is loaded. Then click the 'process all' button and wait until the process has finished. Finally, press 'create tracker' to create the .x3d and the .pm file. They can be found in the directory of the picture.

The tracker learns by observing randomized poses of the image. Activate 'update viewer' if you want to watch the process and check that everything works, but note that training then takes longer. A progress area shows the current step. First, keypoints are searched, which takes about 2 minutes. Next, the activity produces patches from these points (~15 minutes). Finally, the randomized trees are produced (~10 minutes).

It is also possible to change the number of trees. Strictly speaking, this is already an advanced option, but it is the parameter that affects the generation time the most. The recognition rate is very high with 20 trees, but may already be high enough with 10. Once you are more familiar with the behaviour of the tracker, this option is not that advanced: "the more the better" holds only asymptotically (1-exp(-ax)).
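The diminishing return from additional trees, described above as 1-exp(-ax), can be sketched numerically. The rate constant `a` below is a made-up value chosen purely for illustration; the tutorial gives the shape of the curve but no measured constant, so the printed numbers are not recognition rates of the actual tracker.

```python
import math


def saturation(num_trees, a=0.25):
    """Return 1 - exp(-a * num_trees), the saturating curve from the text.

    `a` is a hypothetical rate constant, not a measured value.
    """
    return 1.0 - math.exp(-a * num_trees)


if __name__ == "__main__":
    # Going from 10 to 20 trees adds far less than going from 0 to 10.
    for n in (5, 10, 20, 40):
        print(f"{n:2d} trees -> {saturation(n):.3f}")
```

With any positive `a`, doubling the number of trees from 10 to 20 yields a smaller gain than the first 10 trees did, which is why halving the tree count can be an acceptable trade-off against generation time.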

Advanced Mode

The advanced mode allows the user to change more options. Roughly speaking, it lets you do everything from the simple mode step by step, with the ability to change some parameters. This widget sheet is separated into 4 boxes:

- Poster,

- Intrinsic Data,

- Generate 3D-Points,

- Generate Tracking Data.

They represent the different steps and the corresponding options. Any step can be skipped, in which case it is executed automatically as in the simple mode.

Poster

First of all, the image is loaded. You can scroll the image to get the best view. After clicking the 'mark area' button, you can choose the area to which the image will be cropped, which benefits the learning (mark the area with the left mouse button pressed and move it with the right button pressed). So if the picture has a border that is not part of the tracked poster, you can cut it off. Afterwards, just click 'crop'. Then you can save this poster. As in the simple mode, it is best to choose a filename without any extension in an empty path. See the section Created Files for more information.

To skip this step, you can immediately click 'save' or start with the next step, in which case a save dialog will appear. The image will then be cropped with maximum size and equally distributed borders.

Intrinsic Data

With the 'load' button you can choose your own intrinsic data. If you skip this step, standard intrinsic data will be used.

Generate 3D-Points

All learning parameters for the range of the random poses can be changed here. If you change the maximum and minimum values, you will immediately see the corresponding pose in the viewer. The number of keypoints is an interesting parameter to experiment with. Raising it can be useful for larger posters, but be careful: the generation time of patches and trees grows linearly with it.

It is recommended to produce the keypoints randomly.
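To get a feeling for the cost of raising the number of keypoints, the following sketch scales the timings quoted in the Simple Mode section (about 2 minutes for the keypoint search, ~15 minutes for patches, ~10 minutes for trees). It assumes that only patch and tree generation scale linearly with the keypoint count and that the keypoint search time stays constant; the second part is an assumption, and real timings depend on the machine.

```python
# Baseline timings quoted in this tutorial for the default settings (minutes).
KEYPOINT_SEARCH_MIN = 2.0
PATCHES_MIN = 15.0
TREES_MIN = 10.0


def estimated_minutes(keypoint_factor):
    """Estimate total training time when the keypoint count is scaled.

    keypoint_factor is the ratio new_keypoints / default_keypoints.
    Only patch and tree generation are scaled (assumed linear); the
    keypoint search is assumed to take constant time.
    """
    if keypoint_factor <= 0:
        raise ValueError("keypoint_factor must be positive")
    return KEYPOINT_SEARCH_MIN + keypoint_factor * (PATCHES_MIN + TREES_MIN)


if __name__ == "__main__":
    for factor in (0.5, 1.0, 2.0):
        print(f"x{factor}: about {estimated_minutes(factor):.0f} minutes")
```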

Generate Tracking Data

'Generate Patches' is more or less a debugging step; you only need the trees.

After changing the options, click 'process all', wait a few minutes, and finally click 'create tracker' to create the .x3d and the .pm files.

Expert Properties

Experts may also change the activity attributes. You can change the camera height and width, but the result is tied to the patch size, so experiment to see what happens. The number of random poses is an interesting value: it limits the number of random poses for the keypoint search step. Sometimes the results are better with more points, sometimes with fewer.

Search depth gives the maximum level of the keypoints, in other words the invariance to zoom. For high-resolution pictures it can make sense to raise this value.
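Why a larger picture may need more levels can be illustrated with a small sketch. It assumes that the levels form an image pyramid in which each level halves the resolution of the previous one; this is a common convention, but the tutorial does not state how the levels are actually built, so treat the numbers as illustrative only.

```python
def pyramid_sizes(width, height, levels):
    """Return the (width, height) of each level, assuming halving per level."""
    sizes = []
    for _ in range(levels):
        sizes.append((width, height))
        # Integer halving, never dropping below one pixel.
        width, height = max(1, width // 2), max(1, height // 2)
    return sizes


if __name__ == "__main__":
    # Compare a small and a large reference image at the same depth.
    for size in ((640, 480), (2560, 1920)):
        print(size, "->", pyramid_sizes(*size, levels=4))
```

Under this assumption, the coarsest of 4 levels of a 2560x1920 image is still 320x240, so a poster that appears much smaller than that in the camera image would not be covered without additional levels.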

Created Files

The files are located in the same directory as the chosen image. If the directory contained nothing but the picture, the structure will be as follows:

- ImageName.jpg, the image

- posterTracker.pm, the picmod file for the tracking

- PosterTracker.x3d, the file for the poster tracking

- ImageNameData/PictureName.wrl, the model with the cropped image as texture

- ImageNameData/treeXXXX.txt, the produced randomized trees

- ImageNameData/AllPatchesXXXX.rtp, the produced patches (optional)
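A quick way to verify that the generation step produced everything is to check the layout listed above. The sketch below is a hypothetical helper, not part of instant reality; it takes the file names from the list as given and uses wildcards for the numbered tree and patch files, because the exact numbering scheme behind "XXXX" is not specified here.

```python
from pathlib import Path


def check_tracker_output(image_path):
    """Report which of the expected generated files exist next to the image."""
    image = Path(image_path)
    base = image.parent
    data_dir = base / f"{image.stem}Data"  # e.g. refImageData for refImage.jpg
    return {
        "image": image.is_file(),
        "pm": (base / "posterTracker.pm").is_file(),
        "x3d": (base / "PosterTracker.x3d").is_file(),
        "wrl": any(data_dir.glob("*.wrl")),
        "trees": len(list(data_dir.glob("tree*.txt"))),
        "patches": len(list(data_dir.glob("AllPatches*.rtp"))),  # optional
    }


if __name__ == "__main__":
    # Example path taken from the tutorial; adjust to your own setup.
    print(check_tracker_output(r"C:\TestPosterTracker\refImage.jpg"))
```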

Using the tracker and embedding it into the AR application

The file with the initially chosen filename and the extension .x3d is a tracker for the learned poster and the chosen properties.

The generated x3d-file takes the camera parameters estimated by the tracking module and uses them to render the model contained in the generated wrl-file. After the generation step, this file simply contains the 3D model with the cropped image as texture. The image is located in the x/y-plane, and the world origin is in the center of the image. If the x3d-file is executed with InstantPlayer, the overlay on the real camera image is simply the reference image. This can be used to validate the poster tracking quality. For more impressive augmentations, the wrl-file can be replaced with something more elaborate.

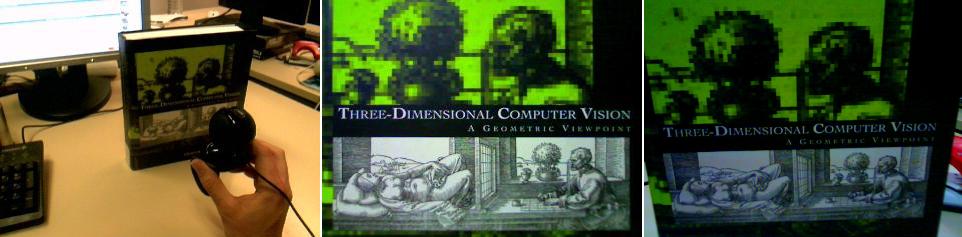

Here is a link to the tracking video!

Advanced features and tricks

- The standard camera is a DirectShow device. If other cameras are used, the VideoSourceAction in the pm-file needs to be adapted. To obtain the correct configuration of a connected camera, the InstantVision GUI can be used. Just create a VideoSourceAction, select the correct device and resolution, and check whether the acquired image looks fine. If everything is ok, save the configuration and copy/paste the VideoSourceAction into your poster tracker pm-file.

- It is recommended not to use images that are too large. An ImageResizeAction converts all camera images to a resolution of 320x240. If your DirectShow device supports a resolution of 320x240, the ImageResizeAction in the pm-file can be removed.

- By default, only 4 pyramid levels of the reference image are used. If the reference image is very large, this may not be enough, especially if the reference image appears very small in the camera image. The number of pyramid levels can be increased by adjusting the attribute levels of the BuildPyramidAction.

- By default, 20 trees are used to initialize the poster tracker. Since the trees require quite a bit of memory, it can be useful to decrease the number of trees. To do so, some trees in the Data directory can simply be deleted or moved somewhere else. In many scenarios, good results were observed with only 10 trees.

- If you want to track more than one poster, just repeat the first step of the simple mode for every poster you want to add.

- By default, a teapot is projected on the poster. If you want to change this, open the .x3d file with an XML editor and replace the teapot with something else.
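The tree reduction mentioned in the list above can be scripted instead of done by hand. The following is a minimal sketch with hypothetical names (`keep_trees`, the `unused_trees` backup folder); it assumes the tree files match `tree*.txt` as listed under Created Files and that the tracker simply loads whichever tree files are present in the Data directory, which is what the tip suggests but is not verified here. Surplus trees are moved to a sibling folder rather than deleted, so they can be restored.

```python
import shutil
from pathlib import Path


def keep_trees(data_dir, keep=10, backup_name="unused_trees"):
    """Move all but the first `keep` tree files out of the Data directory.

    Returns the names of the files that were moved.
    """
    data_dir = Path(data_dir)
    trees = sorted(data_dir.glob("tree*.txt"))
    surplus = trees[keep:]
    if surplus:
        # Backup folder next to (not inside) the Data directory.
        backup = data_dir.parent / backup_name
        backup.mkdir(exist_ok=True)
        for tree in surplus:
            shutil.move(str(tree), str(backup / tree.name))
    return [tree.name for tree in surplus]


if __name__ == "__main__":
    # Example Data directory derived from the tutorial's paths; adjust as needed.
    moved = keep_trees(r"C:\TestPosterTracker\refImageData", keep=10)
    print(f"moved {len(moved)} tree file(s)")
```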